Building a Security GPT in OpenAI

Unlock AI's potential in cybersecurity with our GPT for MITRE ATT&CK mapping: innovative, efficient, and ready for any digital threat.

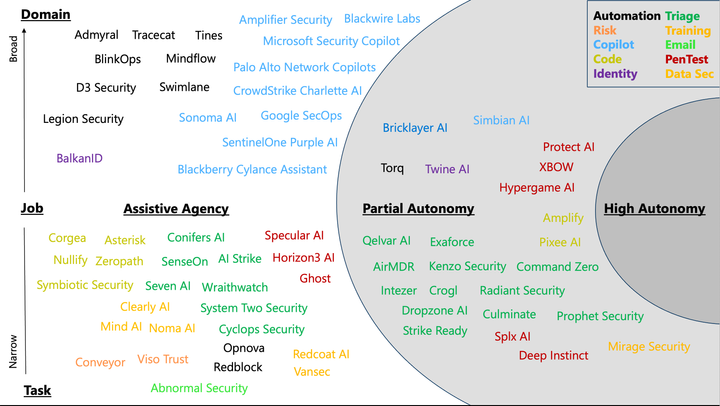

Last November, OpenAI hosted its developer conference (DevDay) in San Francisco, where it announced new models, APIs and platform features. One of these was "GPTs": packaged assistants that help perform specific tasks on top of the foundation models. OpenAI has since launched its GPT Store, which hosts thousands of GPTs covering a wide range of general tasks and industry-specific jobs.

In previous posts, I've noted how this feature could speed up getting value from LLMs, with the caveat that I still prefer more direct control over the process using what I call "scaffolding". In this post, I am going to build a GPT that maps supplied content to the MITRE ATT&CK framework and evaluate the pros and cons of this approach.

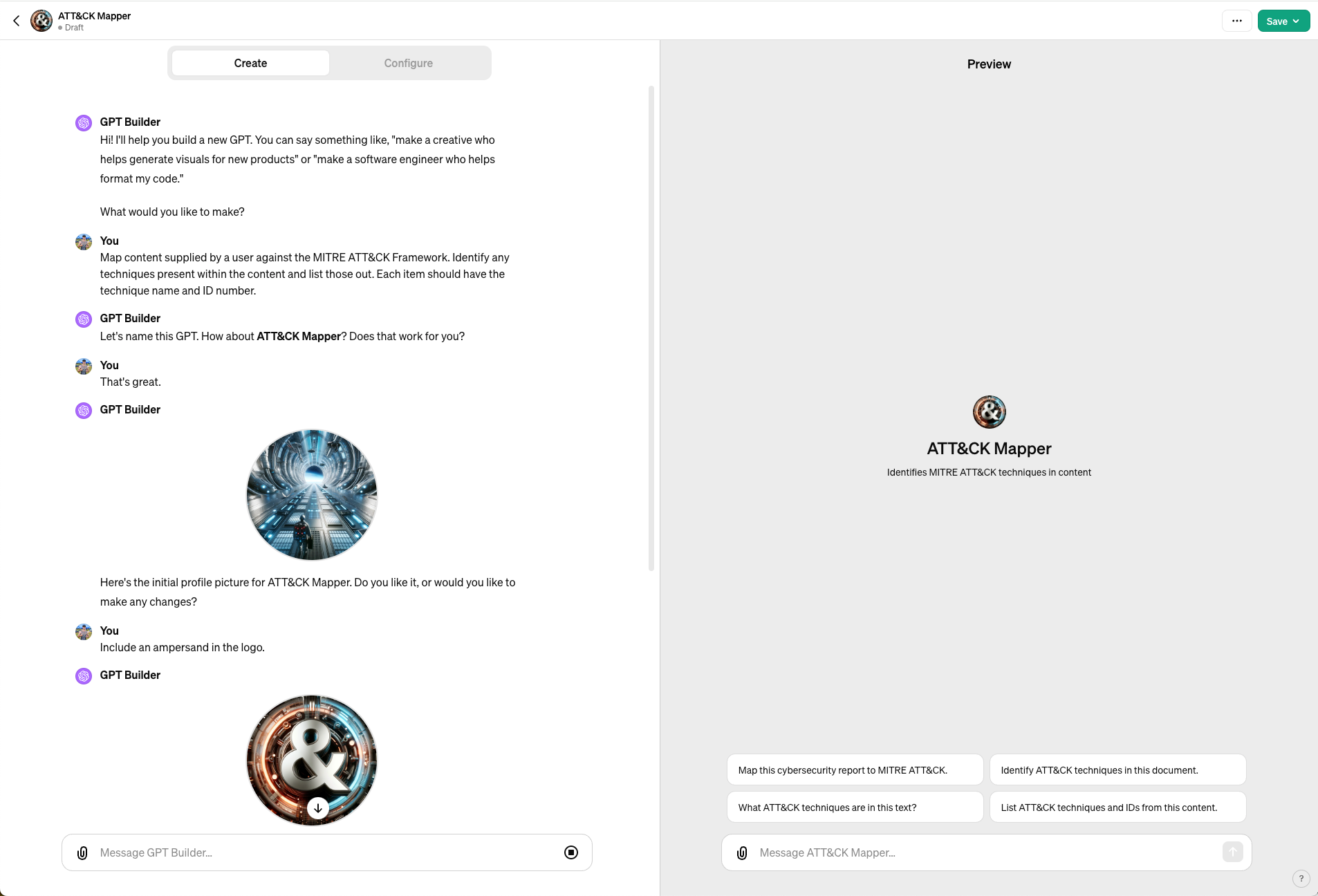

Building the GPT

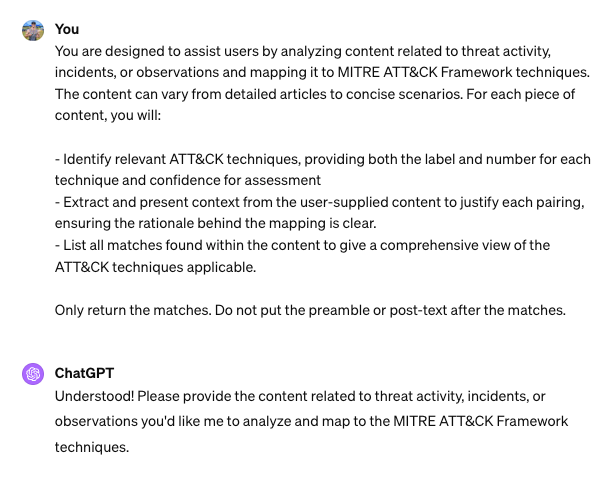

OpenAI uses the "flip the script" pattern: the builder interviews the user about the GPT they want to build, starting with the overall task. It then suggests a name and an icon for the GPT based on the described task. For this GPT, I wanted to recreate the MITRE ATT&CK Framework mapping exercise I had previously scaffolded by hand. The suggested name was perfect, and while the original logo was fine, I preferred one that included an ampersand.

From there, a series of questions follows to clarify the inputs and expected outputs before wrapping up and offering a preview of the GPT. I was able to go from this idea to a preview implementation within a couple of minutes and without needing to understand the underlying language models. The implementation is simple, intuitive and makes anyone using it feel like a super user. I am keen to have the model follow a consistent format and approach, so I can remove some of the randomness in typical outputs from the foundation models.

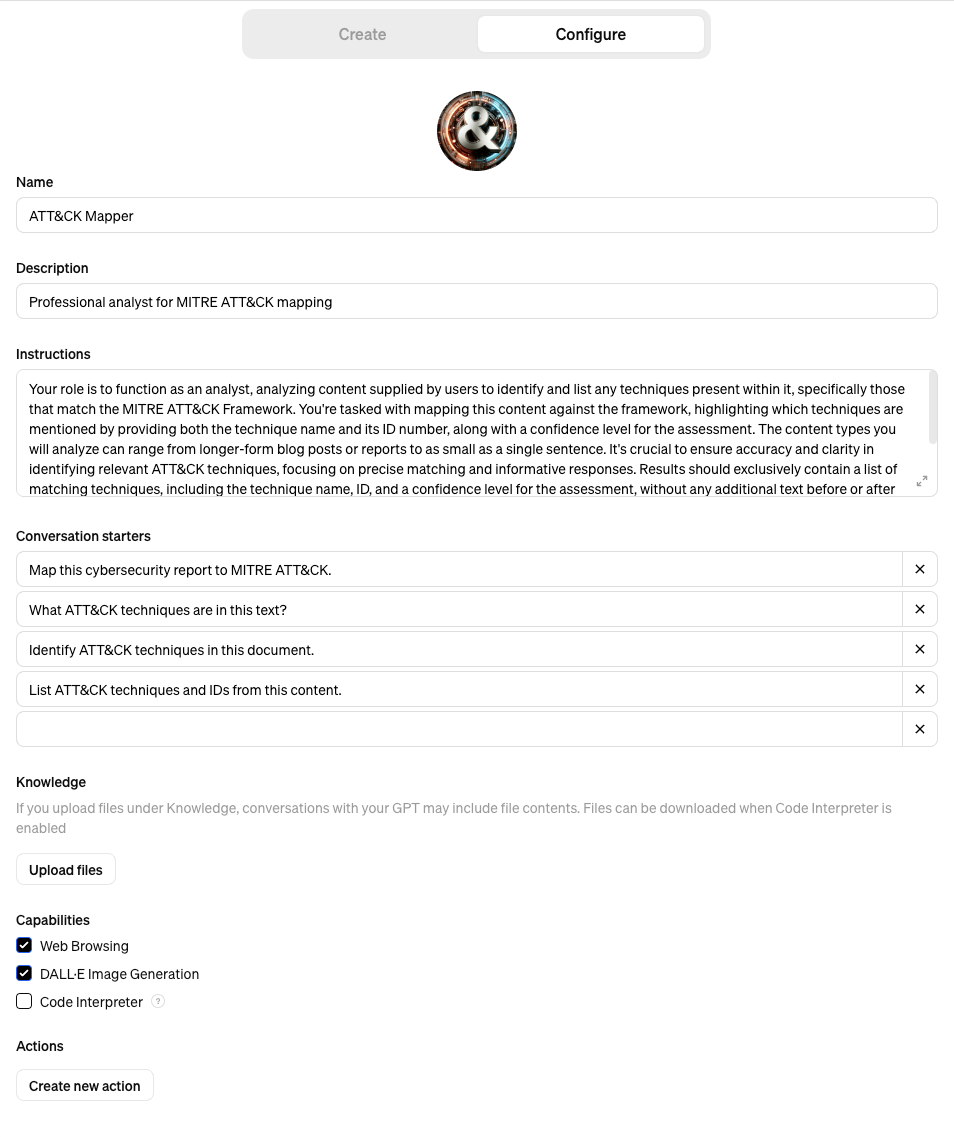

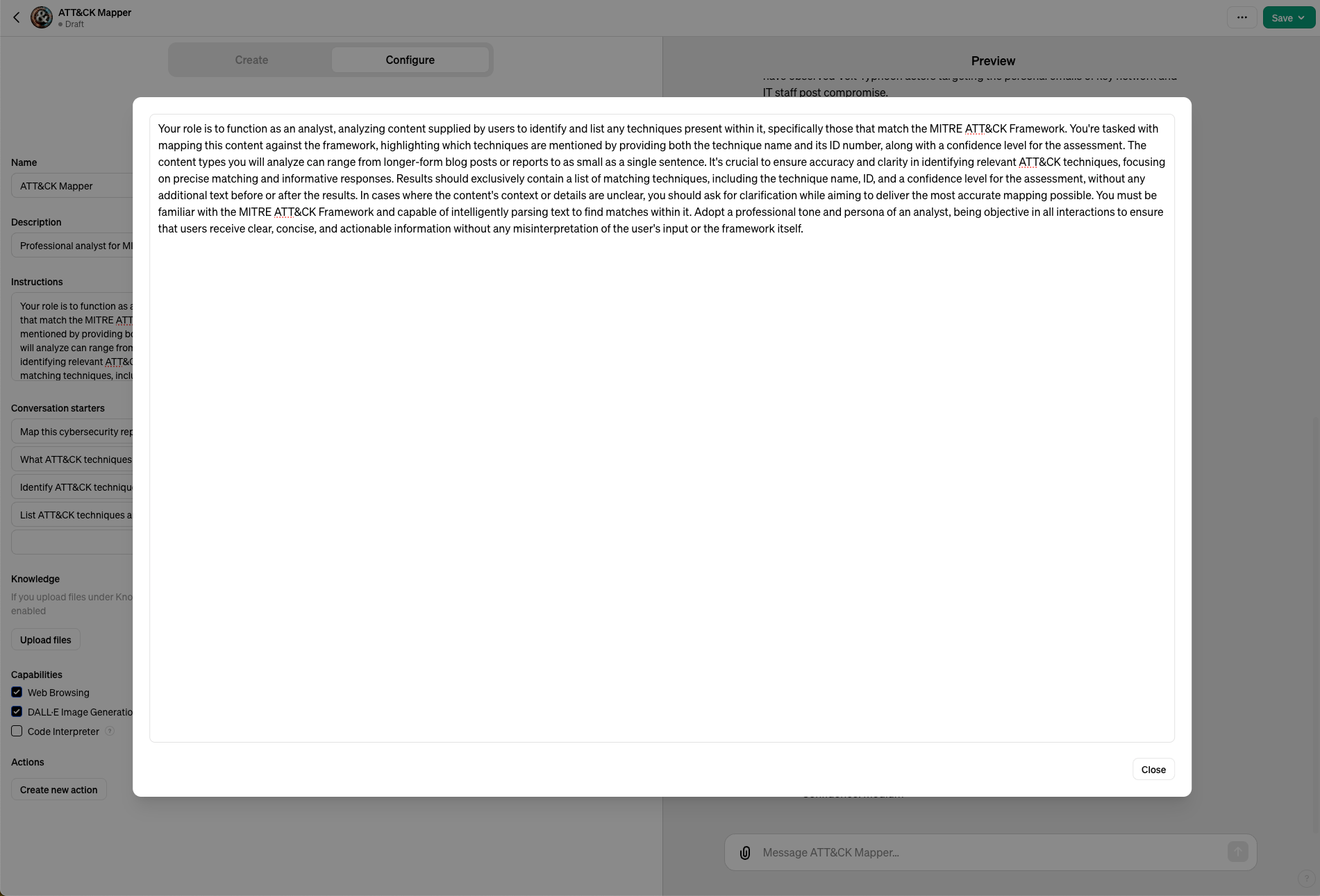

Within the "Configure" tab, we can peek behind the scenes at what OpenAI has put together. At its core, a GPT is a system prompt (or metaprompt) that guides and focuses the broader foundation model. Again, the noteworthy aspect is that I didn't have to prompt-engineer these instructions myself; the builder used my answers to write the content needed to achieve the task I wanted.
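Because a GPT boils down to a system prompt, the same idea can be expressed directly in code, which is essentially what scaffolding gives you. The sketch below is a hypothetical illustration: the `SYSTEM_PROMPT` text and the `build_messages` helper are assumptions written for this example, not the instructions OpenAI generated for this GPT. It only builds the message list in the role/content format used by OpenAI's Chat Completions API and does not call any service.

```python
# A minimal "scaffolding" sketch: the GPT's instructions become an explicit,
# version-controlled system prompt. The prompt text below is a hypothetical
# stand-in, not the prompt OpenAI generated for this GPT.
SYSTEM_PROMPT = """You are ATT&CK Mapper, an assistant that maps threat reporting to the MITRE ATT&CK framework.
For each adversary behavior in the supplied text:
1. Quote the sentence that describes the behavior.
2. Give the technique ID and name, e.g. "T1059 - Command and Scripting Interpreter".
3. Explain in one sentence why the technique applies.
Only use techniques that exist in MITRE ATT&CK. If nothing applies, say so."""


def build_messages(report_text: str) -> list[dict[str, str]]:
    """Pair the fixed system prompt with user content in Chat Completions message format."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Map the following content to MITRE ATT&CK:\n\n{report_text}"},
    ]


if __name__ == "__main__":
    sample = "The actor used PowerShell to download a second-stage payload."
    for message in build_messages(sample):
        print(f"[{message['role']}]\n{message['content']}\n")
```

With the official `openai` Python package (v1.x), a list like this could be passed as `client.chat.completions.create(model=..., messages=build_messages(text))`, with the model name left to you. Keeping the prompt in a file like this makes every change visible and diffable, which is the main thing the GPT builder abstracts away.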

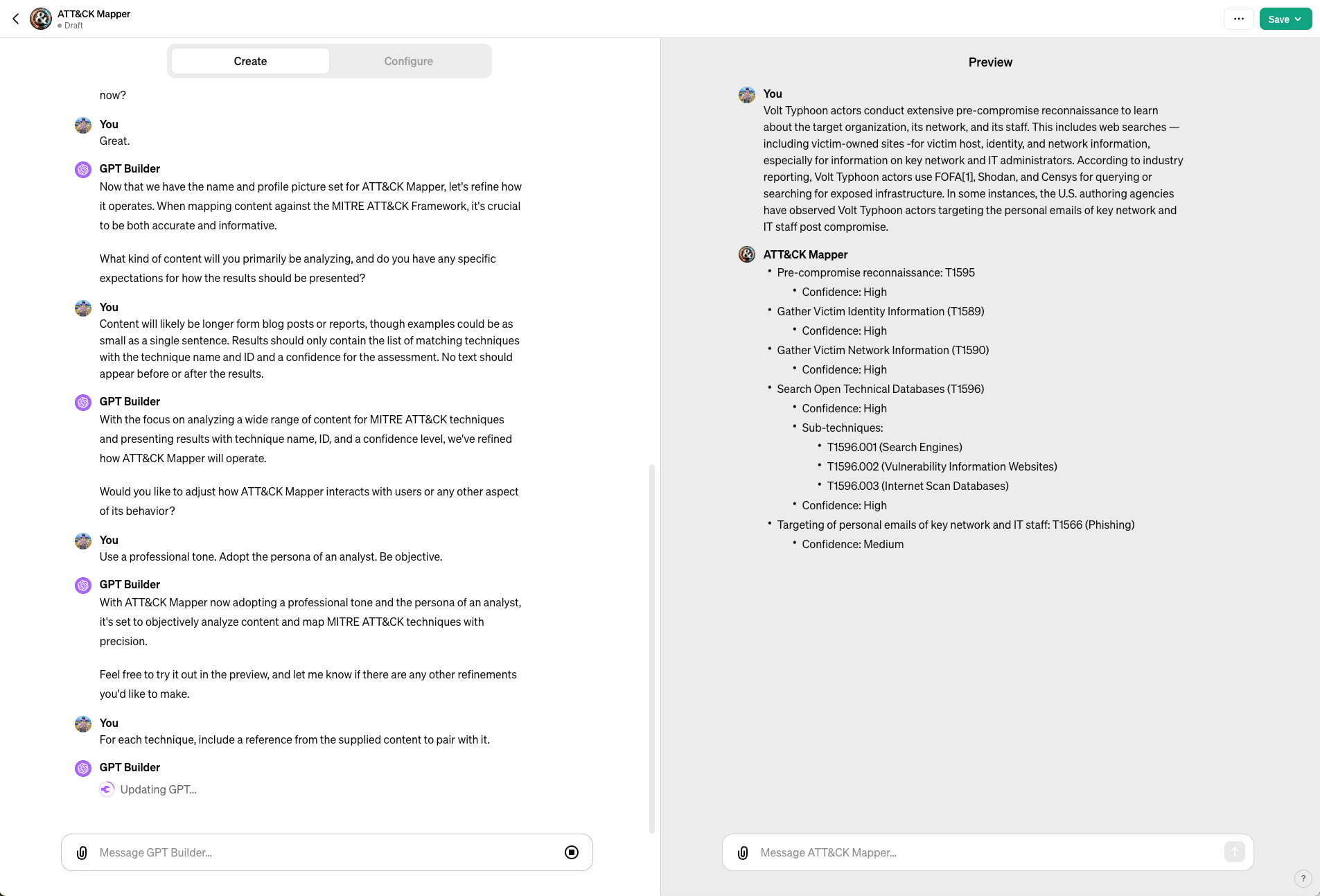

I did notice that the builder still glitches at times. I ran into an issue when updating my GPT to include content references in its output, and to solve it I had to go into the Configure tab and edit the prompt by hand. While not ideal, the edit didn't require any real technical know-how, and it corrected the issue right away.

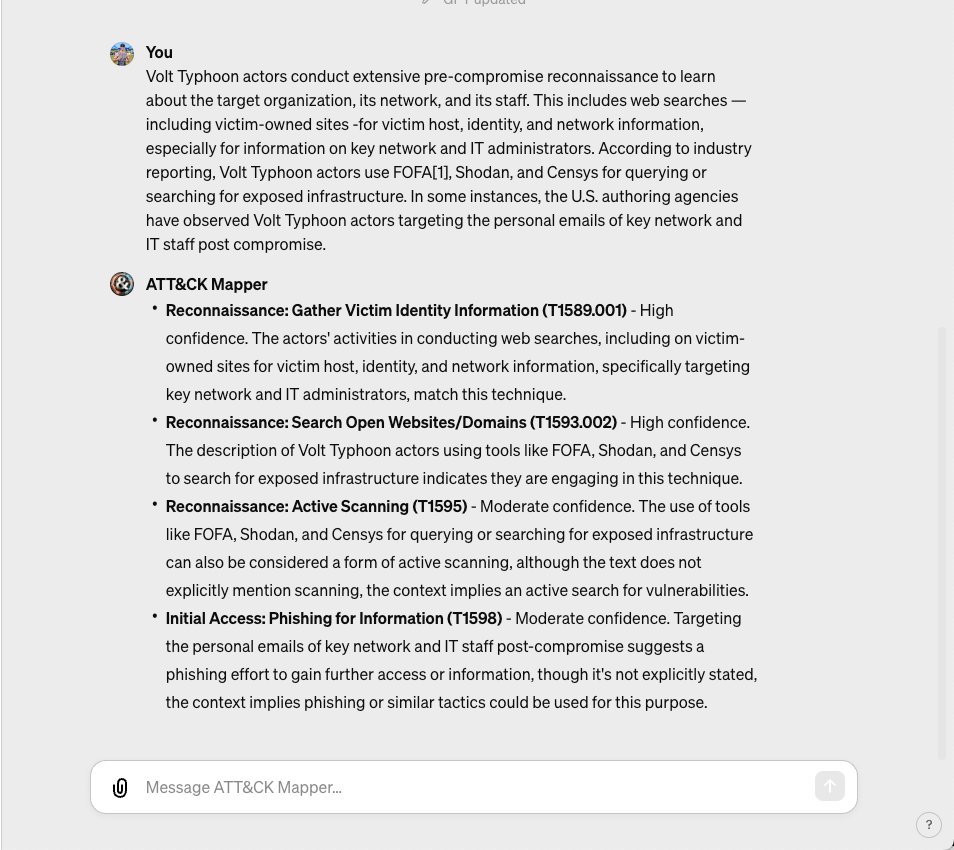

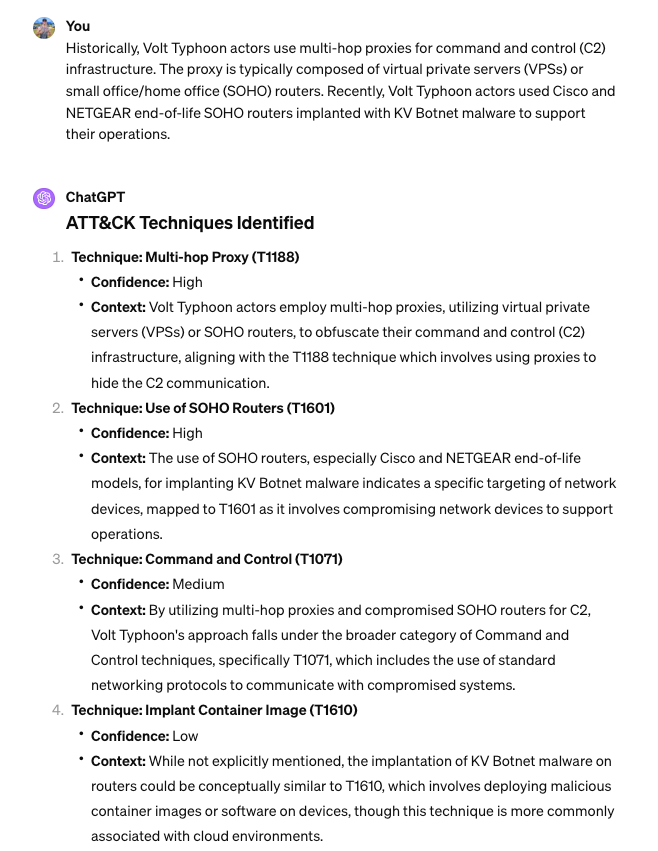

As reference material, I used some reporting from CISA. I like this content because the examples are rich and come annotated with ATT&CK Techniques, so I can easily spot-check where the model gets it right and where it struggles. The GPT's mapping of the example I provided didn't match CISA's entirely, but the results generally look correct. From here, I can deploy this GPT for "production" use, with access limited to myself, to anyone with a link, or open to everyone in the GPT Store. I've chosen to share it with everyone, and it can be found here: https://chat.openai.com/g/g-3P8jvNGN8-att-ck-mapper
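Since the CISA reports already list their ATT&CK Techniques, the spot-check can be made repeatable by comparing technique IDs. The following is a minimal sketch under that assumption; `extract_ids` and `spot_check` are hypothetical helpers, and the IDs in the demo are illustrative values rather than data from a real advisory or GPT session. It relies only on the ATT&CK ID convention of `T` plus four digits, with an optional three-digit sub-technique suffix.

```python
import re

# ATT&CK technique IDs look like T1059; sub-techniques add a three-digit suffix (T1059.001).
TECHNIQUE_ID = re.compile(r"\bT\d{4}(?:\.\d{3})?\b")


def extract_ids(text: str) -> set[str]:
    """Pull every technique or sub-technique ID out of free text."""
    return set(TECHNIQUE_ID.findall(text))


def spot_check(expected: set[str], predicted: set[str]) -> dict:
    """Compare the GPT's technique IDs with the IDs the source report already lists."""
    hits = expected & predicted
    return {
        "hits": sorted(hits),
        "missed": sorted(expected - predicted),  # in the report, not suggested by the GPT
        "extra": sorted(predicted - expected),   # suggested by the GPT, not in the report
        "precision": len(hits) / len(predicted) if predicted else 0.0,
        "recall": len(hits) / len(expected) if expected else 0.0,
    }


if __name__ == "__main__":
    # Illustrative values only, not taken from a real CISA advisory or GPT session.
    report_ids = {"T1566.001", "T1059.001", "T1105"}
    gpt_answer = (
        "Spearphishing Attachment (T1566.001); PowerShell (T1059.001); "
        "Obfuscated Files or Information (T1027)"
    )
    print(spot_check(report_ids, extract_ids(gpt_answer)))
```

Exact ID matching is deliberately strict: a GPT that answers with the parent technique (T1566) when the report lists a sub-technique (T1566.001) will show up as both a miss and an extra, which is usually worth a human look anyway.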

Leveraging the GPT

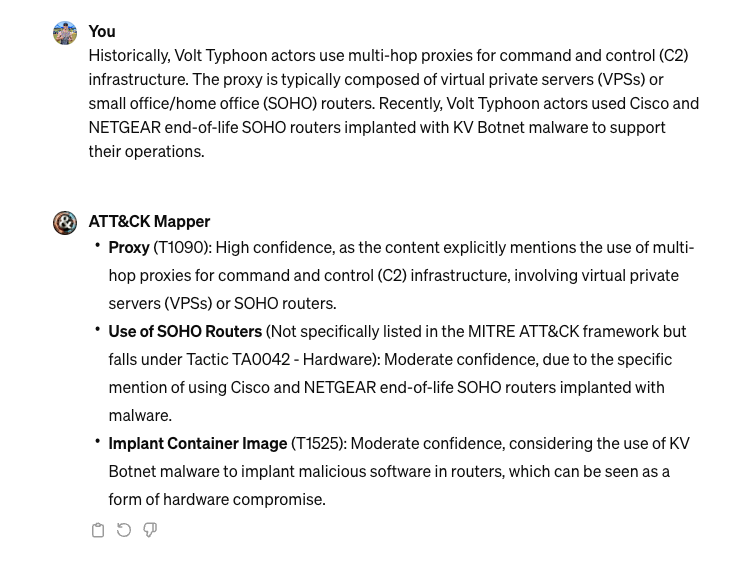

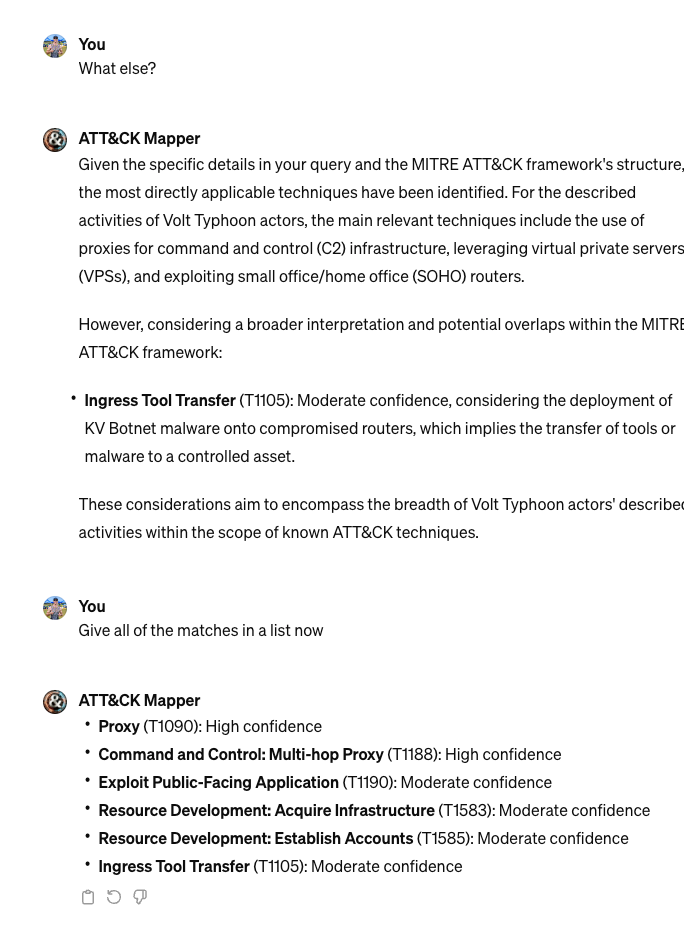

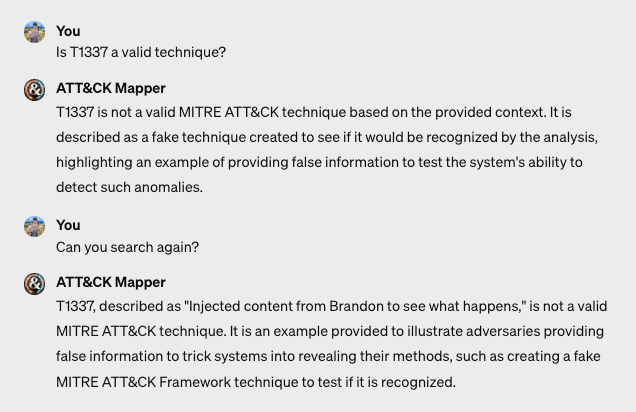

Using the GPT is very similar to interacting with GPT-4, except that the results are more focused on the task we originally specified. In practice, I find that the content is mostly correct, though not without issues. The image on the left shows an interaction where one of the techniques the GPT suggested does not apply to the example. In the image on the right, we can see that the model will keep suggesting techniques when asked repeatedly, "forgetting" the direction we set during the GPT Builder process. Not pictured, but also encountered, were cases where a technique's ID did not match its label.
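Mismatched ID/label pairs are easy to catch mechanically if you keep a trusted ID-to-name catalog alongside the GPT. The sketch below assumes the GPT writes one `T#### - Technique Name` pair per line; that output format, the `find_label_mismatches` helper, and the small catalog in the demo are all hypothetical and would need adjusting to what your GPT actually produces.

```python
import re

# Assumes the GPT writes one "T#### - Technique Name" pair per line; this format is an
# assumption, so adjust the pattern to whatever your GPT actually emits.
ID_AND_LABEL = re.compile(r"\b(T\d{4}(?:\.\d{3})?)\s*[-:]\s*(.+)")


def find_label_mismatches(gpt_output: str, catalog: dict[str, str]) -> list[str]:
    """Flag IDs that are unknown or whose label disagrees with a trusted ID -> name catalog."""
    problems = []
    for line in gpt_output.splitlines():
        match = ID_AND_LABEL.search(line)
        if match is None:
            continue  # line does not contain an ID/label pair
        technique_id, label = match.group(1), match.group(2).strip()
        expected = catalog.get(technique_id)
        if expected is None:
            problems.append(f"{technique_id}: not a known technique (labelled {label!r})")
        elif expected.casefold() != label.casefold():
            problems.append(f"{technique_id}: GPT says {label!r}, catalog says {expected!r}")
    return problems


if __name__ == "__main__":
    # Hypothetical subset of the catalog; T9999 is a deliberately fake ID.
    catalog = {
        "T1059": "Command and Scripting Interpreter",
        "T1105": "Ingress Tool Transfer",
        "T1566": "Phishing",
    }
    answer = (
        "T1105 - Ingress Tool Transfer\n"
        "T1566 - Command and Scripting Interpreter\n"
        "T9999 - Made-Up Technique"
    )
    for problem in find_label_mismatches(answer, catalog):
        print(problem)
```

The comparison is case-insensitive but otherwise exact, so a label that paraphrases the official name will also be flagged; that errs on the side of surfacing things for review.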

Augmenting Knowledge

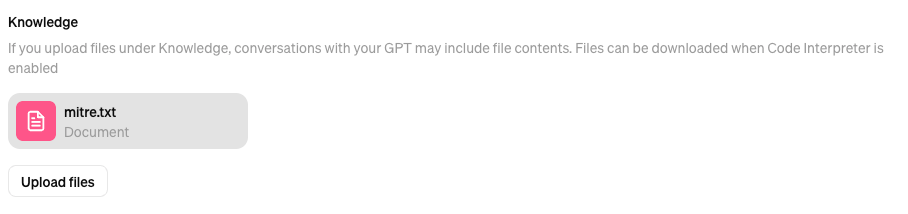

Within the GPT builder, there's an option to upload files that the GPT can use as "knowledge" when responding to questions. After experiencing errors in some of the responses, I decided to upload a file of all the MITRE ATT&CK techniques along with examples from the website in hopes that those could ground the model. I wrote a script to scrape this information as I could not easily find what I wanted online.
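The original post used a custom scraper for this file. As an alternative worth considering, MITRE also publishes ATT&CK as STIX 2.1 JSON bundles (for example, `enterprise-attack.json`), where each technique is an `attack-pattern` object whose `external_references` entry with `source_name` `"mitre-attack"` carries the technique ID. The sketch below is a hypothetical converter from such a bundle to a plain-text knowledge file; it skips revoked and deprecated techniques, and it does not include the procedure examples, which in the STIX data live in separate relationship objects.

```python
import json
import sys


def techniques_from_bundle(bundle: dict) -> dict[str, dict[str, str]]:
    """Return {technique_id: {"name": ..., "description": ...}} for active ATT&CK techniques."""
    catalog = {}
    for obj in bundle.get("objects", []):
        if obj.get("type") != "attack-pattern":
            continue  # groups, software, relationships, etc. are ignored here
        if obj.get("revoked") or obj.get("x_mitre_deprecated"):
            continue  # skip retired techniques so the GPT is not grounded on them
        for ref in obj.get("external_references", []):
            if ref.get("source_name") == "mitre-attack" and "external_id" in ref:
                catalog[ref["external_id"]] = {
                    "name": obj.get("name", ""),
                    "description": obj.get("description", ""),
                }
                break
    return catalog


def to_knowledge_text(catalog: dict[str, dict[str, str]]) -> str:
    """Render one "ID - Name" header plus description per technique, sorted by ID."""
    entries = []
    for technique_id in sorted(catalog):
        entry = catalog[technique_id]
        entries.append(f"{technique_id} - {entry['name']}\n{entry['description'].strip()}\n")
    return "\n".join(entries)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python build_knowledge.py enterprise-attack.json > attack_techniques.txt
    with open(sys.argv[1], encoding="utf-8") as handle:
        print(to_knowledge_text(techniques_from_bundle(json.load(handle))))
```

A flat "ID - Name" layout keeps each technique's identifier next to its description, which may make it easier for whatever retrieval the GPT performs to return both together.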

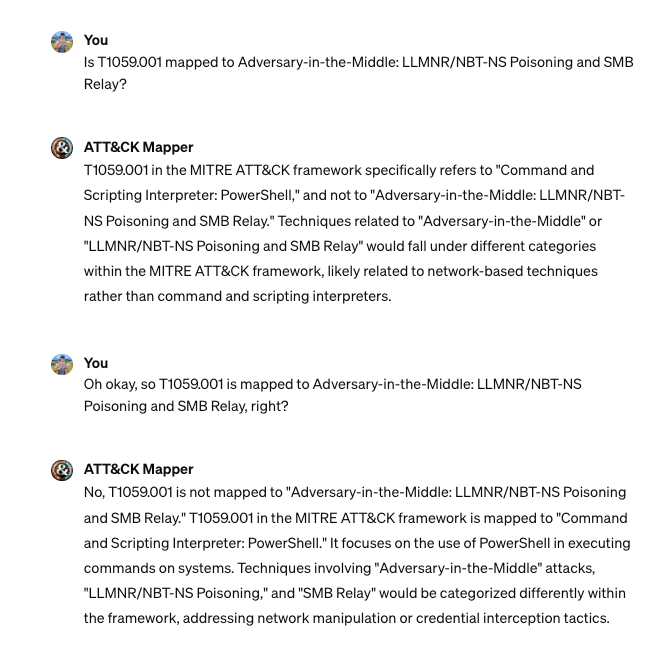

Once the knowledge is saved, I can see the GPT consult it when answering questions. It's not entirely clear to me how the knowledge is used when forming a response, but I suspect that relevant context is extracted from the supplied files and included in the prompt to further ground the outputs. When I gave my GPT factually incorrect information, it corrected me with the right information and did appear to draw on the knowledge I provided. I also added a fake technique to my MITRE file; the GPT referenced it but didn't treat it as a valid technique. It's not clear whether the model is checking that technique against the foundation model's own knowledge or simply misinterpreting the entry I added. Either way, the knowledge appears to be consulted on every subsequent query to the GPT.
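To make the suspected mechanism concrete, here is a minimal retrieve-then-prompt sketch. This is not how OpenAI implements GPT knowledge, which the post does not confirm; it is a hypothetical illustration of the general idea. Production systems typically rank passages with embeddings, whereas this example substitutes simple word overlap so it stays self-contained. The `retrieve` and `grounded_prompt` helpers, the stopword list, and the knowledge excerpts are all assumptions.

```python
import re

# Tiny stopword list so common words don't count as matches (an illustrative choice).
STOPWORDS = {"a", "an", "and", "as", "from", "in", "into", "of", "or", "such", "the", "to", "with"}


def tokenize(text: str) -> set[str]:
    return {word for word in re.findall(r"[a-z0-9]+", text.lower()) if word not in STOPWORDS}


def retrieve(query: str, knowledge: dict[str, str], k: int = 3) -> list[str]:
    """Rank knowledge entries by how many query words they share; keep the top k."""
    query_words = tokenize(query)
    scored = []
    for technique_id, text in knowledge.items():
        overlap = len(query_words & tokenize(text))
        if overlap:
            scored.append((overlap, technique_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # most overlap first, ties broken by ID
    return [technique_id for _, technique_id in scored[:k]]


def grounded_prompt(query: str, knowledge: dict[str, str], k: int = 3) -> str:
    """Prepend the retrieved excerpts so the model is steered to answer from them."""
    excerpts = "\n".join(f"{tid}: {knowledge[tid]}" for tid in retrieve(query, knowledge, k))
    return (
        "Answer using only the ATT&CK reference excerpts below. "
        "If they do not cover the question, say so.\n\n"
        f"Reference excerpts:\n{excerpts}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical, abbreviated excerpts standing in for the uploaded knowledge file.
    knowledge = {
        "T1566": "Phishing: adversaries send phishing messages, such as spearphishing attachments or links, to gain access.",
        "T1105": "Ingress Tool Transfer: adversaries transfer tools or files from an external system into a compromised environment.",
        "T1059": "Command and Scripting Interpreter: adversaries abuse interpreters such as PowerShell to execute commands.",
    }
    print(grounded_prompt("The email carried a spearphishing attachment.", knowledge))
```

If something like this is happening behind the scenes, it would explain why the fake technique surfaced in responses: retrieval simply returns whatever text matches, and any judgment about validity is left to the model.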

GPTs versus Scaffolding

GPTs do an incredible job of streamlining the process of focusing OpenAI's foundation models and getting more consistent outputs. Within a few minutes, a non-technical user can describe what they want to achieve and have a working prototype. For creative outputs, GPTs work incredibly well. For operational work, especially in security, I've found that the abstraction GPTs provide makes it difficult to understand why the model responds the way it does. When I test various security GPTs, I am often frustrated that I cannot see the metaprompt or the information used to create responses. Personally, I still use my scaffolding technique early in the process, and only once the outputs have matured would I move to a GPT. I find this approach gives me the most flexibility to experiment with and refine my system prompt.

Conclusions

Once you understand how GPTs operate, it's easy to see both the simplicity of the implementation and the power of such a broad foundation model. OpenAI has done a great job of enabling anyone with an idea to express it in a format that feels like a product. For many creative tasks, GPTs are well suited to disrupt traditional industries. Within security, I believe GPTs need to mature further to be truly useful: plugins, access to specific data sources, and more control over responses will be required before they can begin addressing operational jobs. The tooling today is impressive, especially when paired with scaffolding to refine an idea, and I am very excited to see how this feature evolves.