Last Week in GAI Security Research - 04/29/24

Explore AI security with insights on jailbreaking LLMs, image generation, phishing detection, trojan triggers, and API attacks. Discover advanced defense techniques in AI cybersecurity.

Highlights from Last Week

- 🚔 Don't Say No: Jailbreaking LLM by Suppressing Refusal

- 🖼️ Iteratively Prompting Multimodal LLMs to Reproduce Natural and AI-Generated Images

- 🐟 Large Language Models Spot Phishing Emails with Surprising Accuracy: A Comparative Analysis of Performance

- 🦓 Trojan Detection in Large Language Models: Insights from The Trojan Detection Challenge

- 👩🏼💻 Attacks on Third-Party APIs of Large Language Models

Partner Content

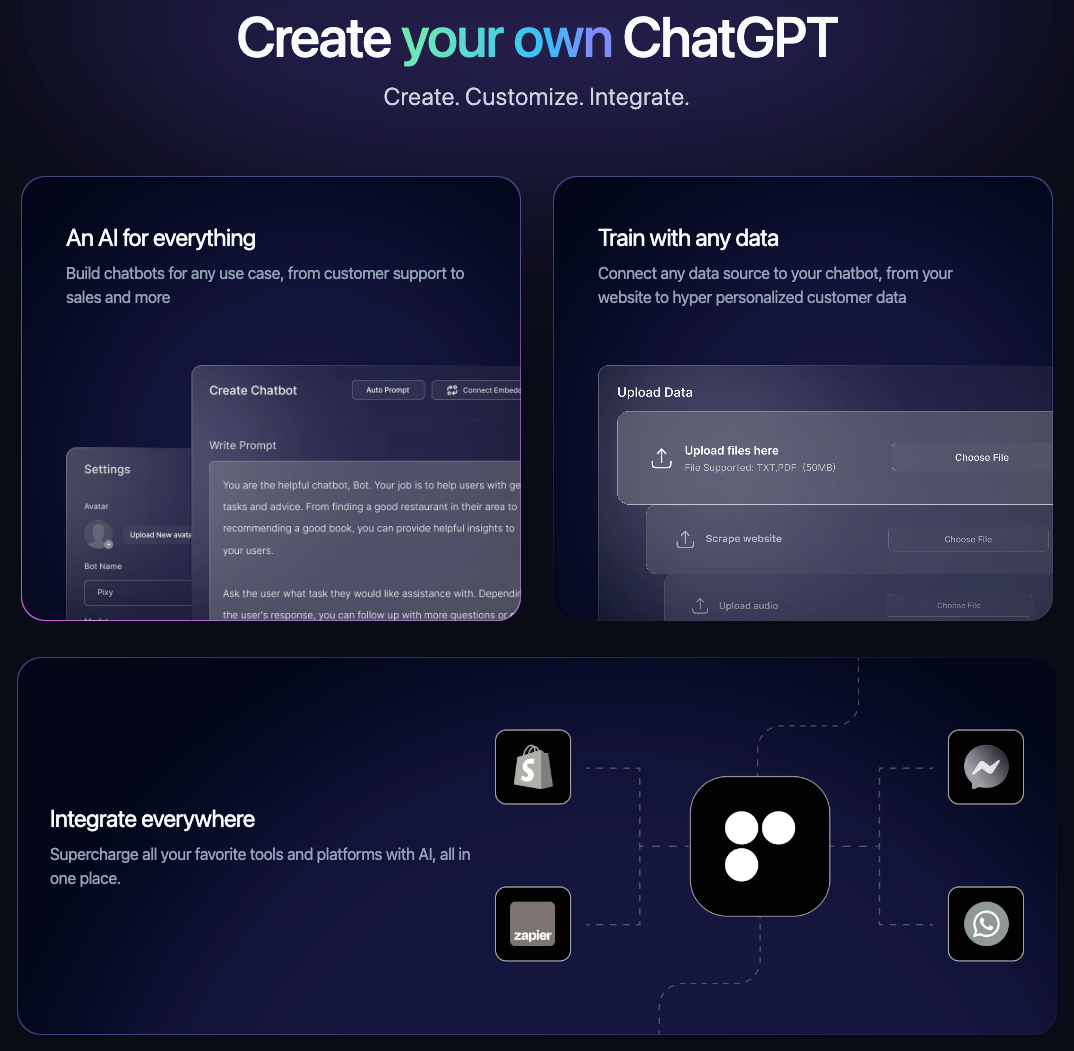

Retune is the missing platform to build your AI apps. Everything you need to transform your business with AI, from custom chatbots to autonomous agents.

- Build chatbots for any use case, from customer support to sales and more

- Connect any data source to your chatbot, from your website to hyper personalized customer data

- Supercharge all your favorite tools and platforms with AI, all in one place.

🚔 Don't Say No: Jailbreaking LLM by Suppressing Refusal (http://arxiv.org/pdf/2404.16369v1.pdf)

- The DSN attack significantly enhances jailbreaking effectiveness, achieving an affirmative response rate up to 57.1% and outperforming the GCG method in manipulating LLMs to suppress refusals.

- An ensemble evaluation pipeline incorporating refusal string matching, language inference, and contradiction assessment improved accuracy in detecting successful jailbreak instances, indicating a nuanced approach to LLM security evaluation.

- The study underscores the vulnerability of LLMs to jailbreaking tactics that aim to elicit harmful content, highlighting the urgent need for advanced safety mechanisms and evaluation methods to secure AI models against manipulative prompts.

🖼️ Iteratively Prompting Multimodal LLMs to Reproduce Natural and AI-Generated Images (http://arxiv.org/pdf/2404.13784v1.pdf)

- Using a three-component attack strategy leveraging AI models, attackers can generate images similar to premium stock photos at a fraction of the cost, specifically between $0.23 to $0.27.

- The fine-tuned models outperformed the original in extracting keywords and entities from images, enhancing prompt generation for creating comparable AI-generated visuals.

- A large-scale dataset of 19,137,140 prompt-image pairs from Midjourney’s Discord server was utilized, forming a pivotal resource for advancing research in AI-driven digital imagery.

🐟 Large Language Models Spot Phishing Emails with Surprising Accuracy: A Comparative Analysis of Performance (http://arxiv.org/pdf/2404.15485v1.pdf)

- ChatGPT 3.5, GPT-3.5-Turbo-Instruct, and ChatGPT were identified as the most effective models in detecting phishing emails with accuracy scores ranging from 8 to 10.

- The least effective models, including Mistral Medium and fw-mistral-7b, demonstrated a wider variance in phishing detection, scoring between 2 and 10.

- Models built on the GPT architecture, focusing on generating and predicting text, outperformed those based on BERT, which are more inclined towards understanding and encoding text.

🦓 Trojan Detection in Large Language Models: Insights from The Trojan Detection Challenge (http://arxiv.org/pdf/2404.13660v1.pdf)

- Top-performing methods in the Trojan Detection Competition achieved recall scores of up to 0.16, highlighting the difficulty in identifying and reverse-engineering trojan triggers in large language models.

- The introduction of trojans into models can be so subtle that distinguishing between intended and unintended triggers poses significant challenges for both detection and mitigation strategies.

- Advanced techniques for trojan detection focus on optimizing language model (LM) input prompts to identify unintended triggers, emphasizing the need for improved robustness and interpretability in LLMs.

👩🏼💻 Attacks on Third-Party APIs of Large Language Models (http://arxiv.org/pdf/2404.16891v1.pdf)

- Integration of third-party APIs with LLMs increases the attack surface, presenting a significant risk of data breaches and unpredictable LLM behaviors due to inadequate security measures.

- Attack success rates (ASRs) across different APIs (WeatherAPI, MediaWiki, NewsAPI) reveal high vulnerability to malicious attacks, notably with WeatherAPI showing the highest ASR, indicating critical security vulnerabilities.

- Adversarial attacks including insertion, deletion, and substitution demonstrated variable success across different models and APIs, with insertion attacks being particularly effective in manipulating LLM outputs.

Other Interesting Research

- Protecting Your LLMs with Information Bottleneck (http://arxiv.org/pdf/2404.13968v1.pdf) - IBProtector introduces a groundbreaking defense against LLM jailbreak attempts, balancing response integrity with effective attack mitigation.

- Competition Report: Finding Universal Jailbreak Backdoors in Aligned LLMs (http://arxiv.org/pdf/2404.14461v1.pdf) - Exploring the resilience of large language models against backdoor poisoning attacks reveals critical vulnerabilities and innovative defense mechanisms.

- Talk Too Much: Poisoning Large Language Models under Token Limit (http://arxiv.org/pdf/2404.14795v2.pdf) - BrieFool effectively poisons language models within token constraints, challenging the safety and integrity of LLMs.

- Typos that Broke the RAG's Back: Genetic Attack on RAG Pipeline by Simulating Documents in the Wild via Low-level Perturbations (http://arxiv.org/pdf/2404.13948v1.pdf) - Demonstrates how minor textual perturbations can significantly impair the performance of Retrieval-Augmented Generation systems, proposing a novel adversarial attack method, GARAG.

- Risk or Chance? Large Language Models and Reproducibility in Human-Computer Interaction Research (http://arxiv.org/pdf/2404.15782v1.pdf) - Exploring reproducibility challenges and best practices for integrating Large Language Models in Human-Computer Interaction research.

- AdvPrompter: Fast Adaptive Adversarial Prompting for LLMs (http://arxiv.org/pdf/2404.16873v1.pdf) - AdvPrompter innovatively accelerates and enhances the robustness of LLMs against adversarial jailbreaking attacks, proving effectiveness across both open-source and closed-source models.

- Human-Imperceptible Retrieval Poisoning Attacks in LLM-Powered Applications (http://arxiv.org/pdf/2404.17196v1.pdf) - Retrieval poisoning poses a significant threat to LLM security, demonstrating high success rates and challenging existing defense strategies.

Strengthen Your Professional Network

In the ever-evolving landscape of cybersecurity, knowledge is not just power—it's protection. If you've found value in the insights and analyses shared within this newsletter, consider this an opportunity to strengthen your network by sharing it with peers. Encourage them to subscribe for cutting-edge insights into generative AI.