Last Week in GAI Security Research - 04/15/24

Balancing AI safety with functionality, highlighting attack effectiveness, exposing language vulnerabilities, innovating content moderation, and exploring security nuances.

- Brandon

Highlights from Last Week

- ✏️ Eraser: Jailbreaking Defense in Large Language Models via Unlearning Harmful Knowledge

- 🚔 AmpleGCG: Learning a Universal and Transferable Generative Model of Adversarial Suffixes for Jailbreaking Both Open and Closed LLMs

- 🥪 Sandwich attack: Multi-language Mixture Adaptive Attack on LLMs

- 🛟 AEGIS: Online Adaptive AI Content Safety Moderation with Ensemble of LLM Experts

- 🧪 Subtoxic Questions: Dive Into Attitude Change of LLM's Response in Jailbreak Attempts

Partner Content

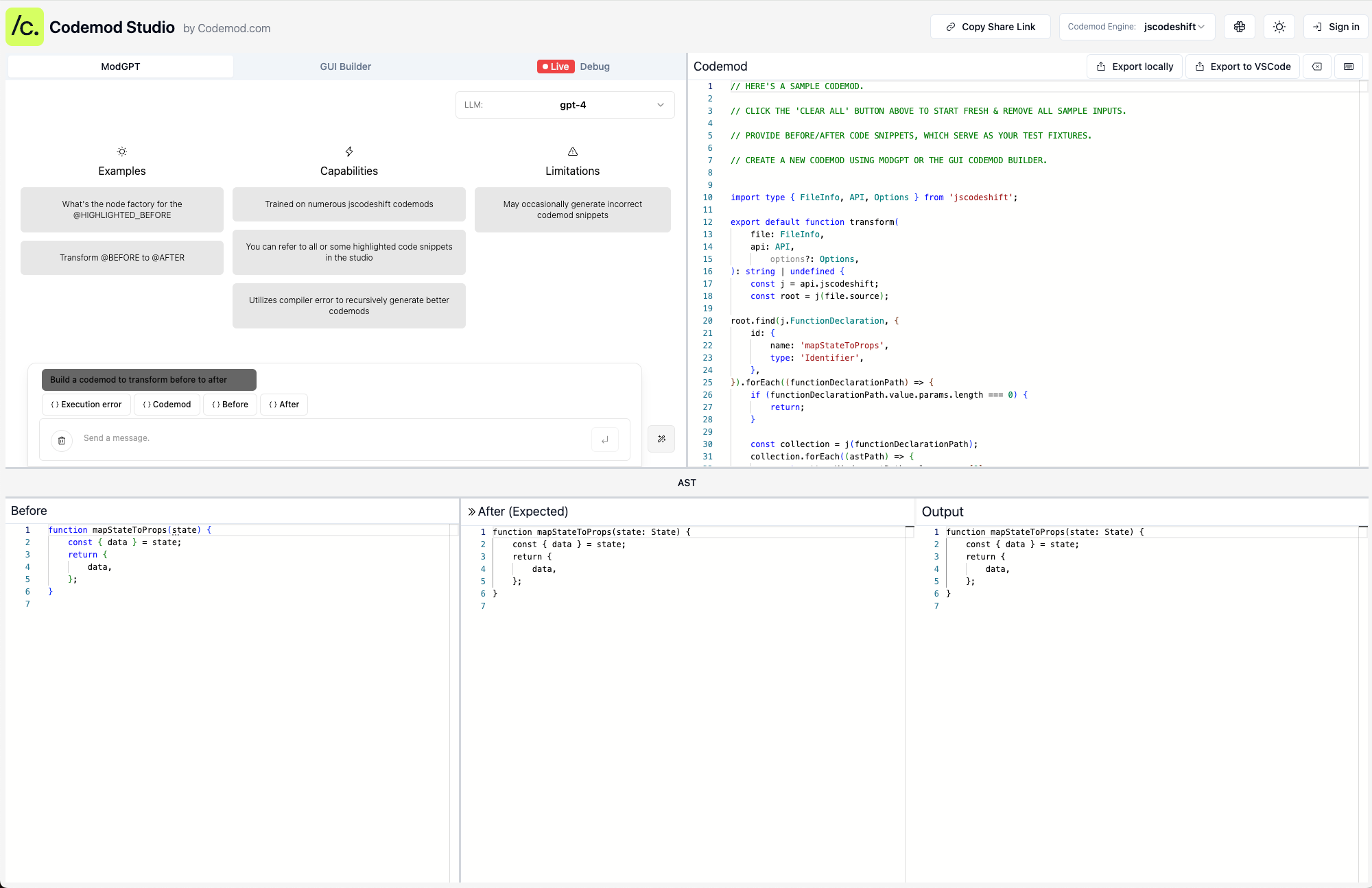

Codemod is the end-to-end platform for code automation at scale. Save days of work by running recipes to automate framework upgrades

- Leverage the AI-powered Codemod Studio for quick and efficient codemod creation, coupled with the opportunity to engage in a vibrant community for sharing and discovering code automations.

- Streamline project migrations with seamless one-click dry-runs and easy application of changes, all without the need for deep automation engine knowledge.

- Boost large team productivity with advanced enterprise features, including task automation and CI/CD integration, facilitating smooth, large-scale code deployments.

✏️ Eraser: Jailbreaking Defense in Large Language Models via Unlearning Harmful Knowledge (http://arxiv.org/pdf/2404.05880v1.pdf)

- Eraser significantly reduces the jailbreaking success rate without compromising the general capabilities of large language models, demonstrating its effectiveness in unlearning harmful knowledge.

- The experimental results reveal that the inclusion of random prefix/suffixes in training enhances the model's defense capability against jailbreaking attacks, underlining the importance of simulating jailbreaking scenarios.

- Eraser's defense depends on balancing the unlearning of harmful knowledge with maintaining safety alignment; a moderate setting of the unlearning constraint threshold (γ) is recommended to preserve both defense and general capabilities.
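
The random prefix/suffix idea in the second point can be pictured with a small sketch. This is only an illustration of wrapping a training prompt in random strings to mimic the shape of a jailbreak prompt; the helper names (`random_affix`, `augment_prompt`), the character set, and the length range are all assumptions and are not taken from the paper.

```python
import random
import string


def random_affix(rng: random.Random, min_len: int = 5, max_len: int = 20) -> str:
    """Return a random string of letters, digits, and punctuation (assumed alphabet)."""
    alphabet = string.ascii_letters + string.digits + string.punctuation
    length = rng.randint(min_len, max_len)
    return "".join(rng.choice(alphabet) for _ in range(length))


def augment_prompt(prompt: str, rng: random.Random) -> str:
    """Wrap a training prompt in a random prefix and suffix (hypothetical helper)."""
    return f"{random_affix(rng)} {prompt} {random_affix(rng)}"


rng = random.Random(0)  # fixed seed so the augmentation is reproducible
augmented = augment_prompt("<training prompt>", rng)
```

The placeholder `<training prompt>` stands in for whatever text the unlearning data contains; only the wrapping step is shown here.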

🚔 AmpleGCG: Learning a Universal and Transferable Generative Model of Adversarial Suffixes for Jailbreaking Both Open and Closed LLMs (http://arxiv.org/pdf/2404.07921v1.pdf)

- AmpleGCG achieves near 100% attack success rate (ASR) on both Llama-2-7B-chat and Vicuna-7B, outperforming the strongest attack baselines.

- AmpleGCG demonstrates seamless transferability, achieving a 99% ASR on GPT-3.5, and can generate 200 adversarial suffixes in only 4 seconds.

- Beyond its efficiency and effectiveness, AmpleGCG's adversarial suffixes can also evade perplexity-based defenses with an 80% success rate.
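
For readers unfamiliar with perplexity-based defenses, here is a minimal sketch of the general idea: score a prompt by its perplexity under some language model and flag it when the score is too high. The log-probabilities and the threshold of 100 below are made-up values, and the function names are hypothetical; this is not the detector evaluated in the paper.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp of the negative mean natural-log token probability."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def is_flagged(token_logprobs: Sequence[float], threshold: float) -> bool:
    """Flag a prompt whose perplexity exceeds the (hypothetical) threshold."""
    return perplexity(token_logprobs) > threshold


# Made-up per-token log-probs; a real filter would get these from a language model.
fluent = [-1.0, -1.2, -0.8, -1.0]     # natural-looking text
gibberish = [-6.0, -7.5, -5.5, -7.0]  # unnatural-looking suffix

fluent_flagged = is_flagged(fluent, threshold=100.0)
gibberish_flagged = is_flagged(gibberish, threshold=100.0)
```

Under this framing, a suffix "evades" the defense whenever the prompt containing it scores below the threshold.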

🥪 Sandwich attack: Multi-language Mixture Adaptive Attack on LLMs (http://arxiv.org/pdf/2404.07242v1.pdf)

- The Sandwich Attack, a novel black-box attack vector, successfully manipulates state-of-the-art Large Language Models (LLMs) like Google’s Bard, GPT-4, and LLaMA-2-70B-Chat, leading them to generate harmful and misaligned responses.

- LLMs' safety mechanisms struggle with multi-language inputs, especially when questions are embedded in a mix of high and low-resource languages, leading to a decrease in the models' ability to filter out harmful responses.

- Experiments reveal a variance in LLMs' safety mechanisms, with certain models like Bard generating harmful responses even when prompted in English, suggesting that safety training effectiveness differs across models.

🛟 AEGIS: Online Adaptive AI Content Safety Moderation with Ensemble of LLM Experts (http://arxiv.org/pdf/2404.05993v1.pdf)

- The AEGIS SAFETY DATASET comprises approximately 26,000 human-LLM interactions; LLMs instruction-tuned on it adapt well to novel content safety policies without compromising performance.

- The novel online adaptation framework AEGIS leverages an ensemble of LLM content safety experts, dynamically selecting the most suitable expert for content moderation, thereby enhancing robustness against multiple jailbreak attack categories.

- AEGIS SAFETY EXPERTS, trained on the curated dataset, not only perform competitively with or surpass state-of-the-art LLM-based safety models but also maintain robustness across varying data distributions and policies in real-time deployment.
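
To make "dynamically selecting the most suitable expert" concrete, the sketch below uses multiplicative weights, one standard online-learning rule for choosing among experts. Whether AEGIS uses this exact update is an assumption; the class name, learning rate, and feedback values are hypothetical.

```python
import random
from typing import List, Sequence


class ExpertSelector:
    """Multiplicative-weights selection over a fixed set of experts (illustrative)."""

    def __init__(self, n_experts: int, learning_rate: float = 0.5) -> None:
        self.weights: List[float] = [1.0] * n_experts
        self.learning_rate = learning_rate

    def probabilities(self) -> List[float]:
        """Normalize the weights into a selection distribution."""
        total = sum(self.weights)
        return [w / total for w in self.weights]

    def choose(self, rng: random.Random) -> int:
        """Sample an expert index in proportion to its current weight."""
        return rng.choices(range(len(self.weights)), weights=self.weights, k=1)[0]

    def update(self, losses: Sequence[float]) -> None:
        """Shrink each expert's weight by its loss in [0, 1] for this round."""
        for i, loss in enumerate(losses):
            self.weights[i] *= 1.0 - self.learning_rate * loss


selector = ExpertSelector(n_experts=3)
# Made-up feedback: expert 1 is always right, experts 0 and 2 are always wrong.
for _ in range(10):
    selector.update([1.0, 0.0, 1.0])
best = max(range(3), key=lambda i: selector.weights[i])
```

After a few rounds of feedback, nearly all of the selection probability sits on the expert that has been making the fewest mistakes, which is the behavior the summary describes.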

🧪 Subtoxic Questions: Dive Into Attitude Change of LLM's Response in Jailbreak Attempts (http://arxiv.org/pdf/2404.08309v1.pdf)

- Subtoxic questions, deliberately designed to test LLM security, demonstrate a higher susceptibility to jailbreaking interventions, presenting a novel angle for assessing LLM vulnerability.

- The Gradual Attitude Change (GAC) Model, introduced in this study, showcases a spectrum of LLM responses to jailbreaking attempts, moving away from binary outcomes to provide a nuanced understanding of LLM behavior under attack.

- Experimental observations suggest that a jailbreak prompt's effectiveness holds across different questions, which makes it possible to rank prompts by their influence on LLM responses.
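
The ranking idea can be sketched as follows, assuming each response is scored on a graded scale rather than as a binary success or failure. The 0.0 to 1.0 scale, the scores, and the prompt names are made up for illustration and are not the paper's GAC scoring scheme.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_prompts(scores: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Rank prompts by mean response score across questions, highest first."""
    averaged = [(name, mean(values)) for name, values in scores.items()]
    return sorted(averaged, key=lambda item: item[1], reverse=True)


# Made-up scores: 0.0 = firm refusal, 1.0 = full compliance, one per question.
scores = {
    "prompt_a": [0.0, 0.25, 0.0],
    "prompt_b": [0.5, 0.75, 0.5],
    "prompt_c": [0.25, 0.25, 0.5],
}
ranking = rank_prompts(scores)
ranked_names = [name for name, _ in ranking]
```

Averaging across questions only makes sense if a prompt's effect is roughly consistent from question to question, which is the observation the last point reports.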

Other Interesting Research

- Take a Look at it! Rethinking How to Evaluate Language Model Jailbreak (http://arxiv.org/pdf/2404.06407v1.pdf) - The study introduces a more nuanced and effective method for evaluating jailbreak attempts on Large Language Models, significantly improving detection accuracy.

- Hidden You Malicious Goal Into Benign Narratives: Jailbreak Large Language Models through Logic Chain Injection (http://arxiv.org/pdf/2404.04849v1.pdf) - Hiding a malicious goal inside a benign narrative exposes critical language model vulnerabilities and underscores the need for stronger defenses.

- Simpler becomes Harder: Do LLMs Exhibit a Coherent Behavior on Simplified Corpora? (http://arxiv.org/pdf/2404.06838v1.pdf) - Models including BERT and GPT-3.5 show up to 50% inconsistency in predictions when processing simplified texts, highlighting a blind spot in understanding plain language.

- Rethinking How to Evaluate Language Model Jailbreak (http://arxiv.org/pdf/2404.06407v2.pdf) - Introducing a multifaceted approach to language model jailbreak evaluation, achieving superior accuracy by assessing safeguard violation, informativeness, and truthfulness.

Strengthen Your Professional Network

In the ever-evolving landscape of cybersecurity, knowledge is not just power—it's protection. If you've found value in the insights and analyses shared within this newsletter, consider this an opportunity to strengthen your network by sharing it with peers. Encourage them to subscribe for cutting-edge insights into generative AI.