Hire an AI Strategist in Security

The current AI boom is unlike any other, moving at an unprecedented pace. It has been nearly two years since the launch of ChatGPT, and new advancements, features, and research papers continue to emerge almost daily. Many of the individuals and organizations I speak with feel overwhelmed by the volume of information being released and are unsure what to focus on to stay up to date. This is why I believe enterprise organizations adopting generative AI should consider staffing a dedicated AI security strategist.

The primary purpose of this role is to stay informed on advancements in the space—specifically from a security perspective—and offer guidance across the organization. This individual partners with various teams to accelerate their understanding of AI and provide clarity. They also help implement security measures that scale alongside AI adoption. By focusing less on day-to-day operational tasks, the role can better navigate the rapid evolution of AI, mitigate potential risks, and ensure that security measures grow to handle increased demands. This role bridges the gap between the excitement for AI adoption and the critical need to understand and manage its evolving security implications—a gap that many organizations acknowledge but struggle to address due to resource constraints.

For the past six months, I've operated in this role, and it has been both fulfilling and highly impactful for the organization. I was reluctant to write this post because of its self-serving nature, but the organizations and customers I speak with across the enterprise are eager to innovate with AI and want to do so safely. I believe this sort of role, while unconventional, is a pathway worth considering.

Here are several workstreams someone in this role may take on with peers across the organization:

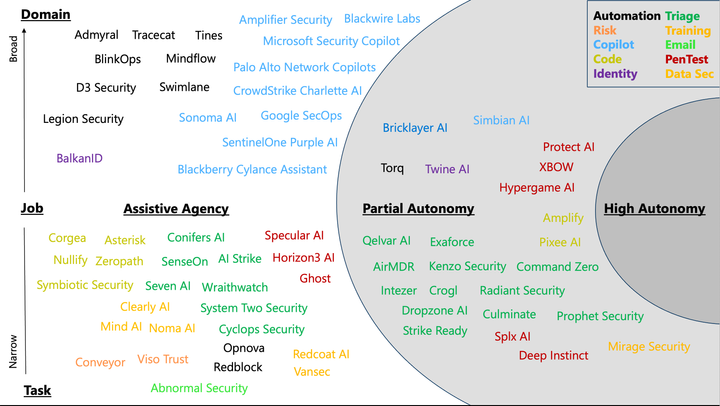

- Develop high-level frameworks to describe AI agents and assess their level of sophistication for security purposes like threat modeling.

- Conduct experiments using agent frameworks on security-related problems to evaluate how generative AI can scale security team operations.

- Analyze the external market for startups and novel AI implementations to inform product planning and identify opportunities to enhance internal security capabilities.

- Summarize and disseminate key findings from generative AI research papers focused on security issues to relevant teams, helping to shape strategies and perspectives.

- Identify vulnerabilities in language models and agentic systems, and assess how traditional security threats could be enhanced by these technologies.

- Analyze broader market trends in the generative AI space, such as advancements in open and small language models, and determine their applicability to address security challenges.

Here are skills that would benefit someone in this role:

- Expertise in cybersecurity, with experience in threat modeling and security frameworks.

- Ability to conduct research and experiments on AI systems to evaluate security impacts.

- Strong market analysis skills to track AI advancements and identify relevant opportunities.

- Effective communication skills for summarizing complex findings and shaping strategic decisions.

- Collaboration skills to work across teams and implement scalable security measures for AI adoption.

If you work in security within a larger enterprise and want help defining this role internally, please reach out to me. I am more than happy to share my experience and offer guidance.