Automating Incident Triage with Copilot for Security

When I speak with Copilot for Security customers, automation often comes up as a topic of exploration. Customers are eager to extend their existing SOAR investments or workflows to include Copilot because they recognize the capabilities this new technology brings and believe it has the potential to further increase productivity.

Today, Copilot for Security offers two ways of performing automations: 1) Promptbooks, which are prompts chained together to achieve a specific task, and 2) a LogicApp connector that brings the power of Copilot for Security directly into your workflows. In this post, we will explore how the LogicApp connector and its set of capabilities can be used to triage an incident, a common action taken by nearly every Security Operations Center (SOC).

Note: This post builds on the original release blog of the connector where a phishing email analysis was performed.

Video Demonstration

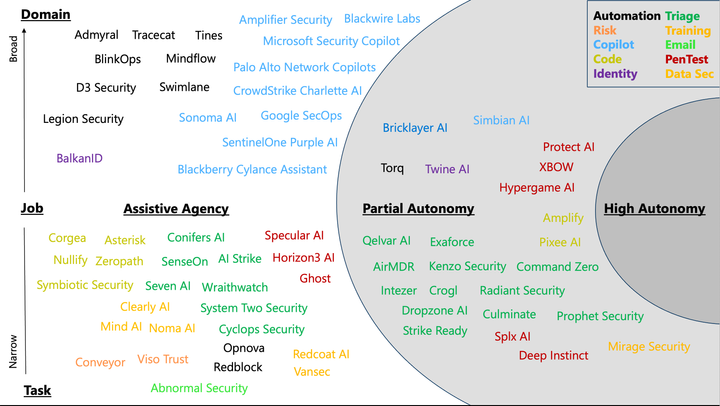

(SIEM + SOAR + GAI) = Next-Gen Automation

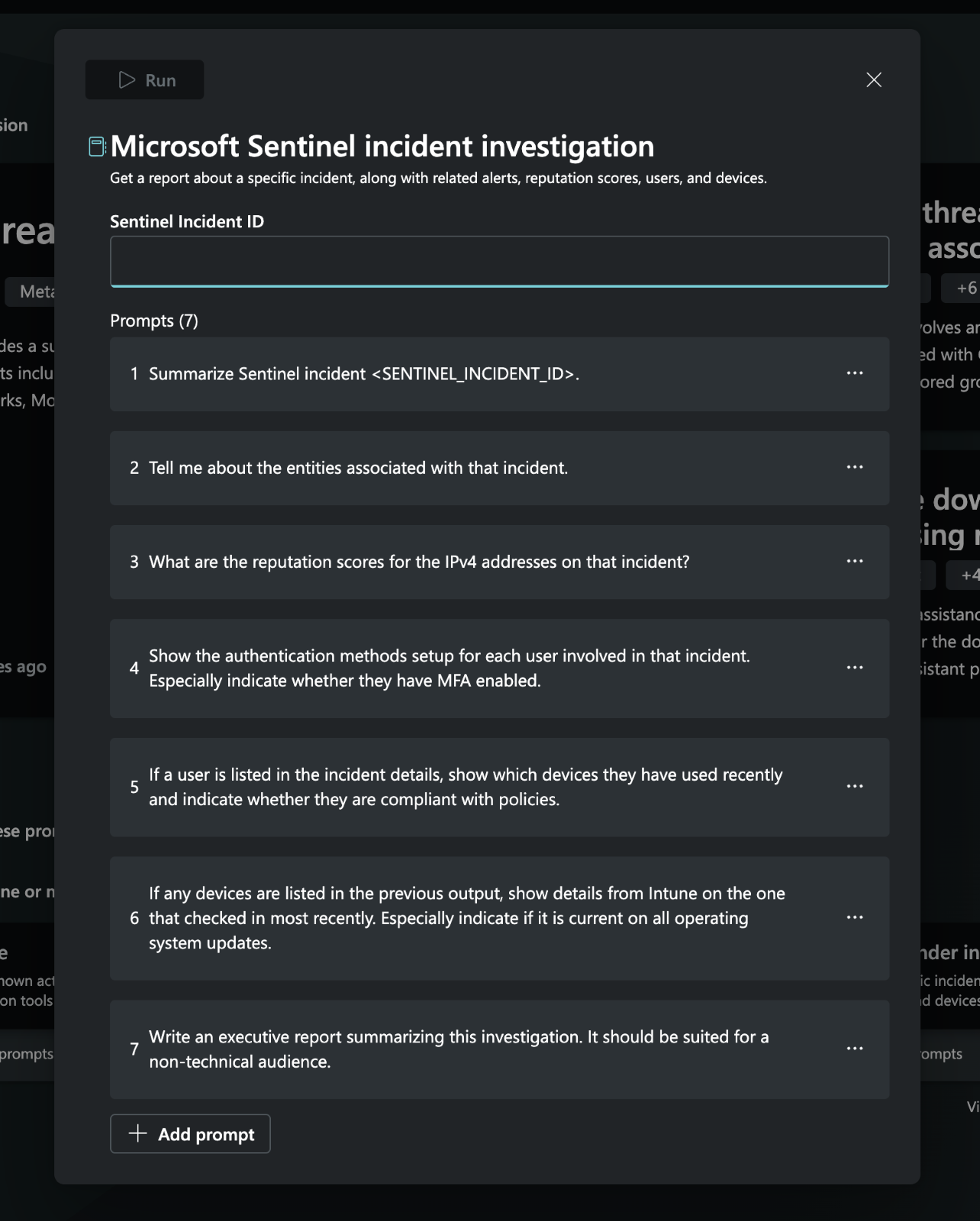

For this demonstration, I am going to use Microsoft Sentinel (SIEM), which includes access to LogicApps through the Automations and Playbook (SOAR) capabilities, and Copilot for Security (GAI). Included in the product is a set of curated Microsoft Promptbooks, including one to triage a Sentinel incident. Running this within the standalone experience will give us a rough sense of what to expect and confidence that we can emulate it within a LogicApp using our connector.

While this workflow does not touch on every aspect of incident triage, it provides a good foundation to operate from. Specifically, this logic will summarize the incident, collect any reputation data for a subset of indicators, identify the authentication methods of the identities impacted, list the devices associated with those identities along with their compliance status, and write an executive report. I am going to keep the core prompts and extend a few to apply more specifically to Sentinel once within the playbook.

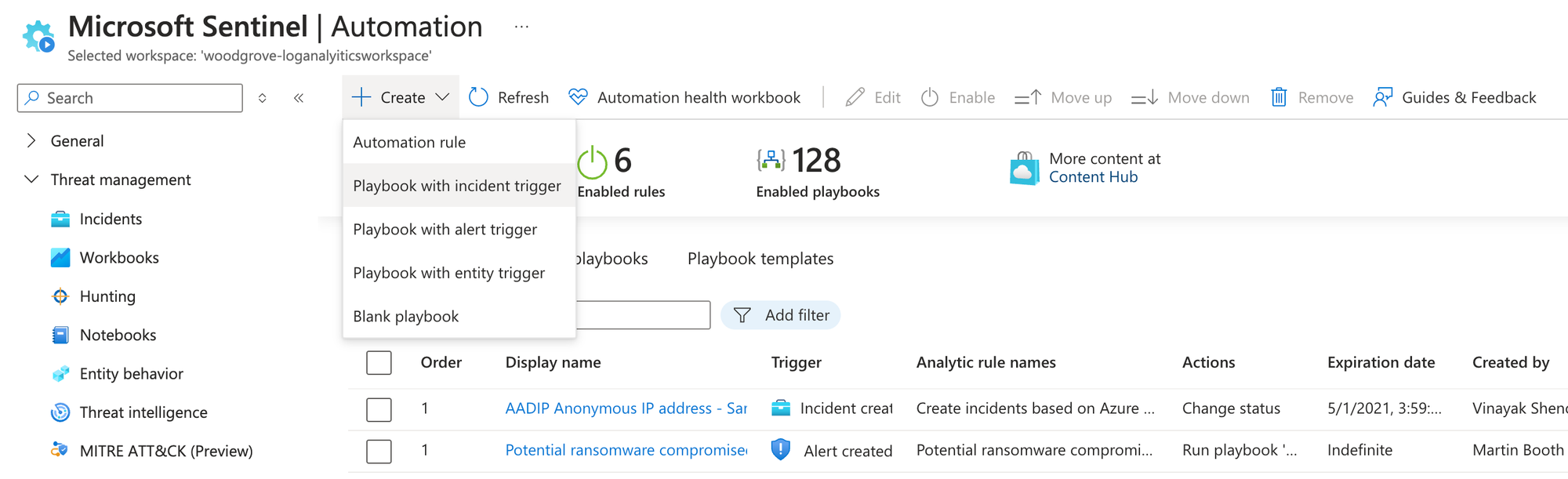

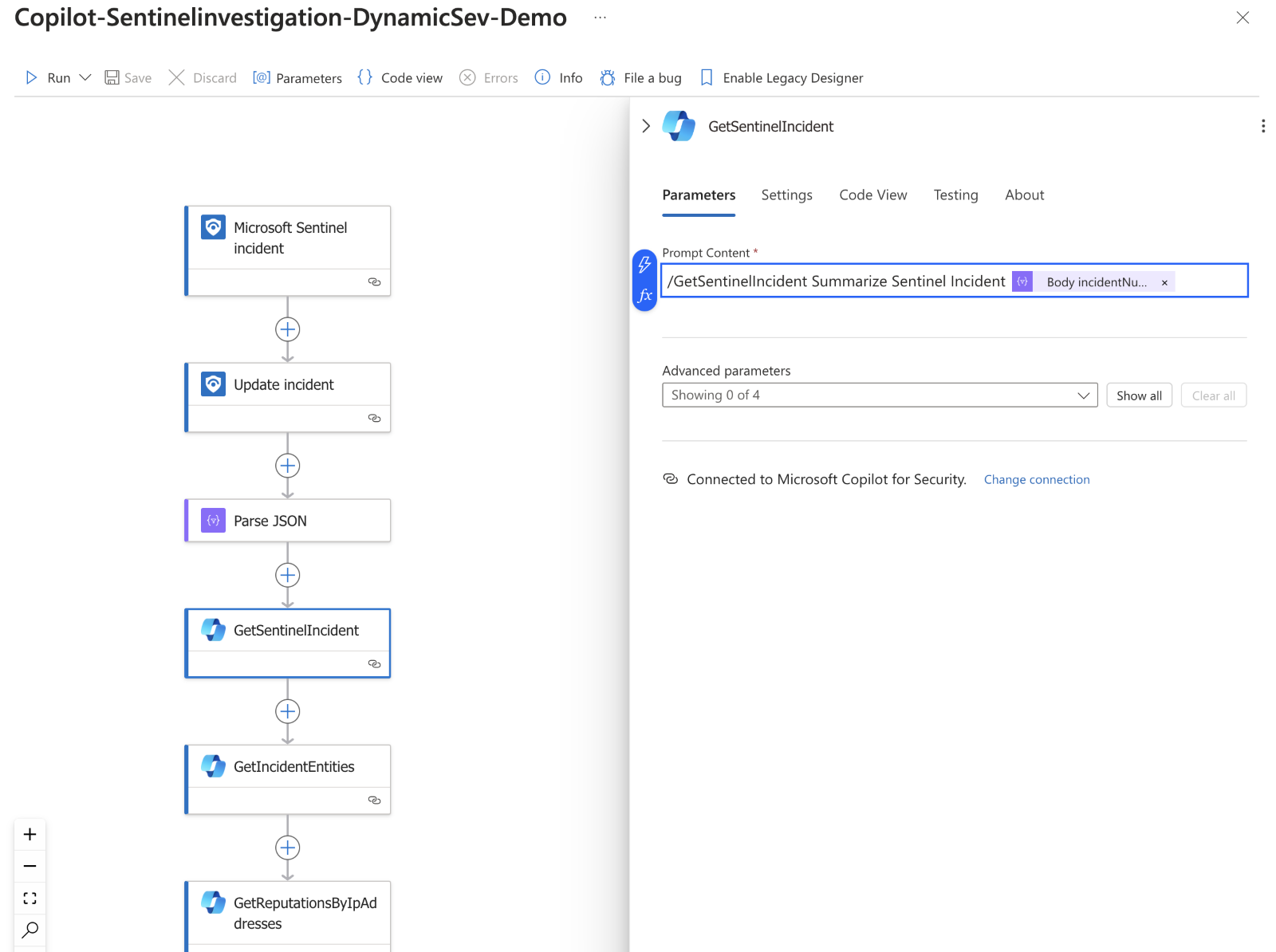

Within Sentinel, I can create a playbook from an incident trigger in the "Automations" section of the product.

Once it is set up, I can use the low-code/no-code editor to input my workflow. I've mimicked much of the promptbook using the Copilot for Security connector. Each step contains the prompt I plan to run and any context from the incident. Like the promptbooks, Copilot for Security will create a session for this playbook, so each prompt gets the benefit of the broader session context and is stored within the product for later analysis or reasoning.

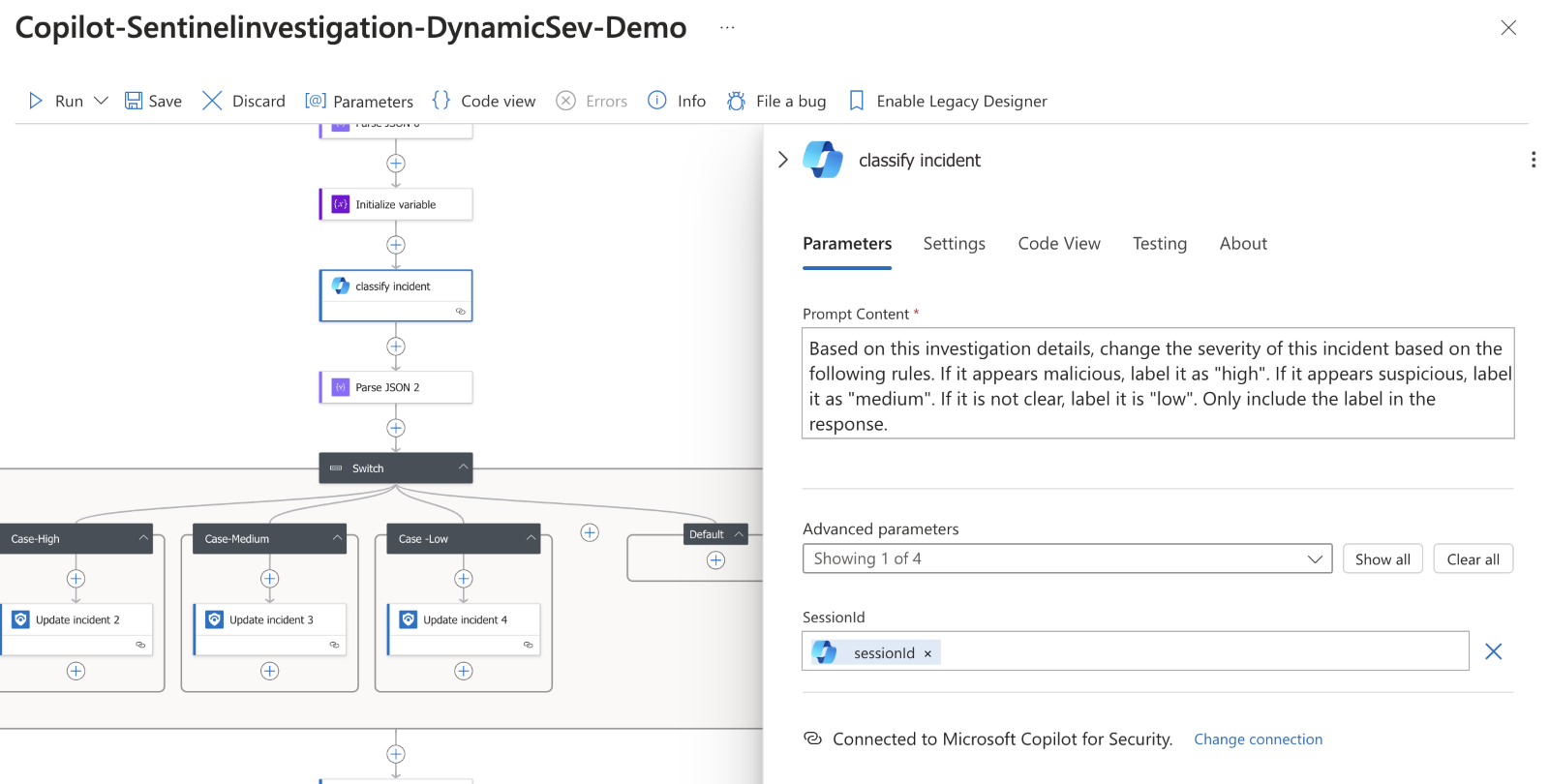
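To make the chaining idea concrete outside of the low-code editor, here is a minimal Python sketch. It is not the connector's API: the prompt wording, the `run_session` function and the `submit` callable are all hypothetical stand-ins, and `fake_submit` simply simulates a response so the example runs on its own. The point is only that each step is a prompt filled in with incident context, and that every prompt sees the exchanges that came before it.

```python
from string import Template
from typing import Callable, Dict, List, Tuple

# Hypothetical prompt wording, loosely following the triage steps described earlier.
PROMPTS: List[Template] = [
    Template("Summarize Sentinel incident $incident_id."),
    Template("Collect reputation data for the indicators in incident $incident_id."),
    Template("Identify the authentication methods of the identities impacted."),
    Template("List the devices associated with those identities and their compliance status."),
    Template("Write an executive report of this investigation."),
]

History = List[Tuple[str, str]]


def run_session(incident: Dict[str, str], submit: Callable[[str, History], str]) -> History:
    """Run each prompt in order, passing prior exchanges along as session context."""
    history: History = []
    for template in PROMPTS:
        prompt = template.substitute(incident)  # inject incident context into the prompt
        response = submit(prompt, history)      # assumed stand-in for the connector step
        history.append((prompt, response))
    return history


def fake_submit(prompt: str, history: History) -> str:
    """Simulated model call so the sketch is runnable without any service."""
    return f"response {len(history) + 1}"


session = run_session({"incident_id": "12345"}, fake_submit)
```

In the actual playbook, the session is managed by Copilot for Security itself; the explicit `history` list here only mirrors that behavior for illustration.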

Each of my prompts helps to answer a common question an analyst may pose, but I still need to bring this information back into Sentinel. LogicApps offer a Sentinel connector that can be used to perform actions on our original incident. Here, I get creative in a few ways using generative AI. First, I leverage the session information and have Copilot attempt to classify the incident as "high", "medium" or "low" based on all the information contained in the responses, forcing the model to return a label. This label is fed into a switch statement, which in turn updates the incident status and severity.

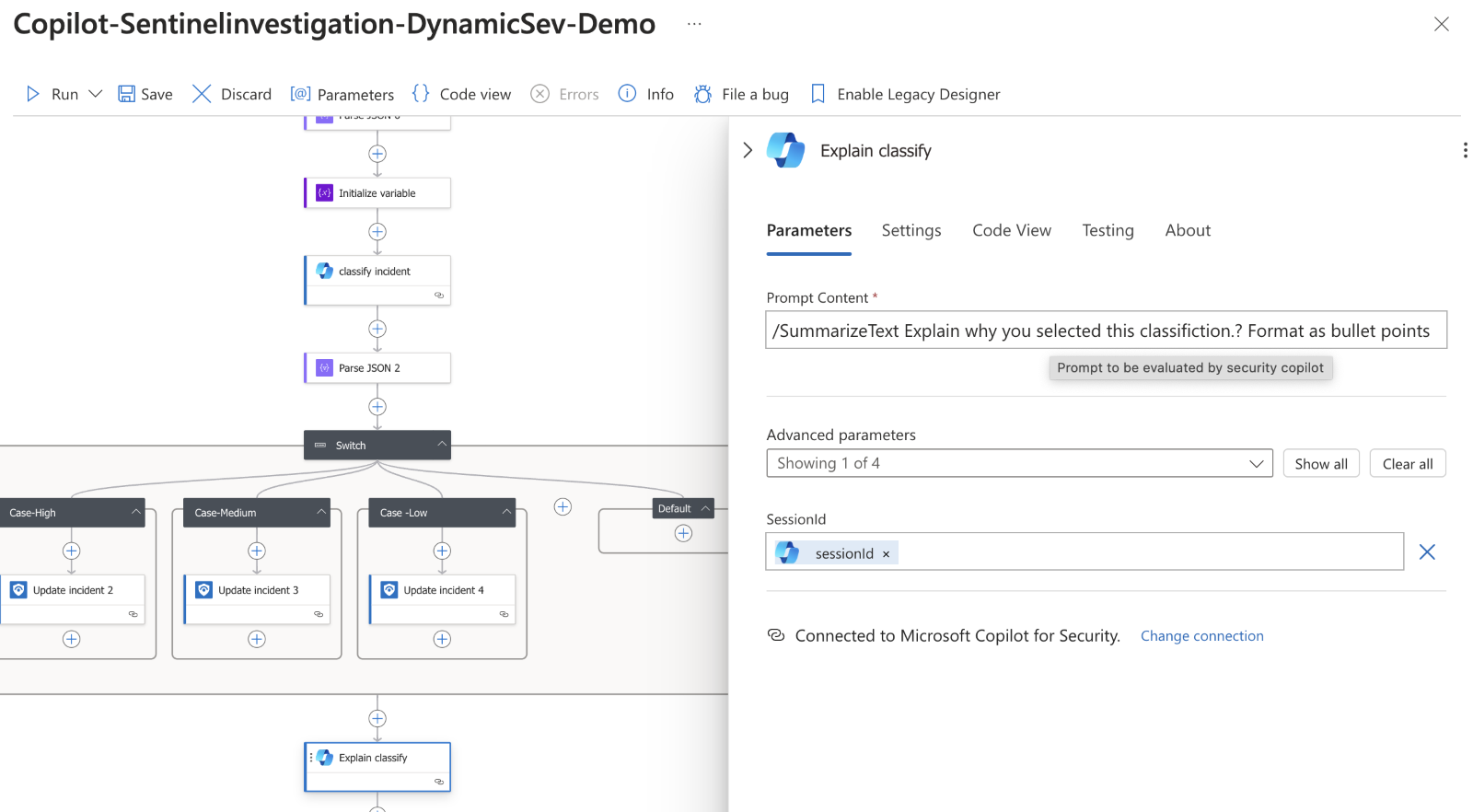

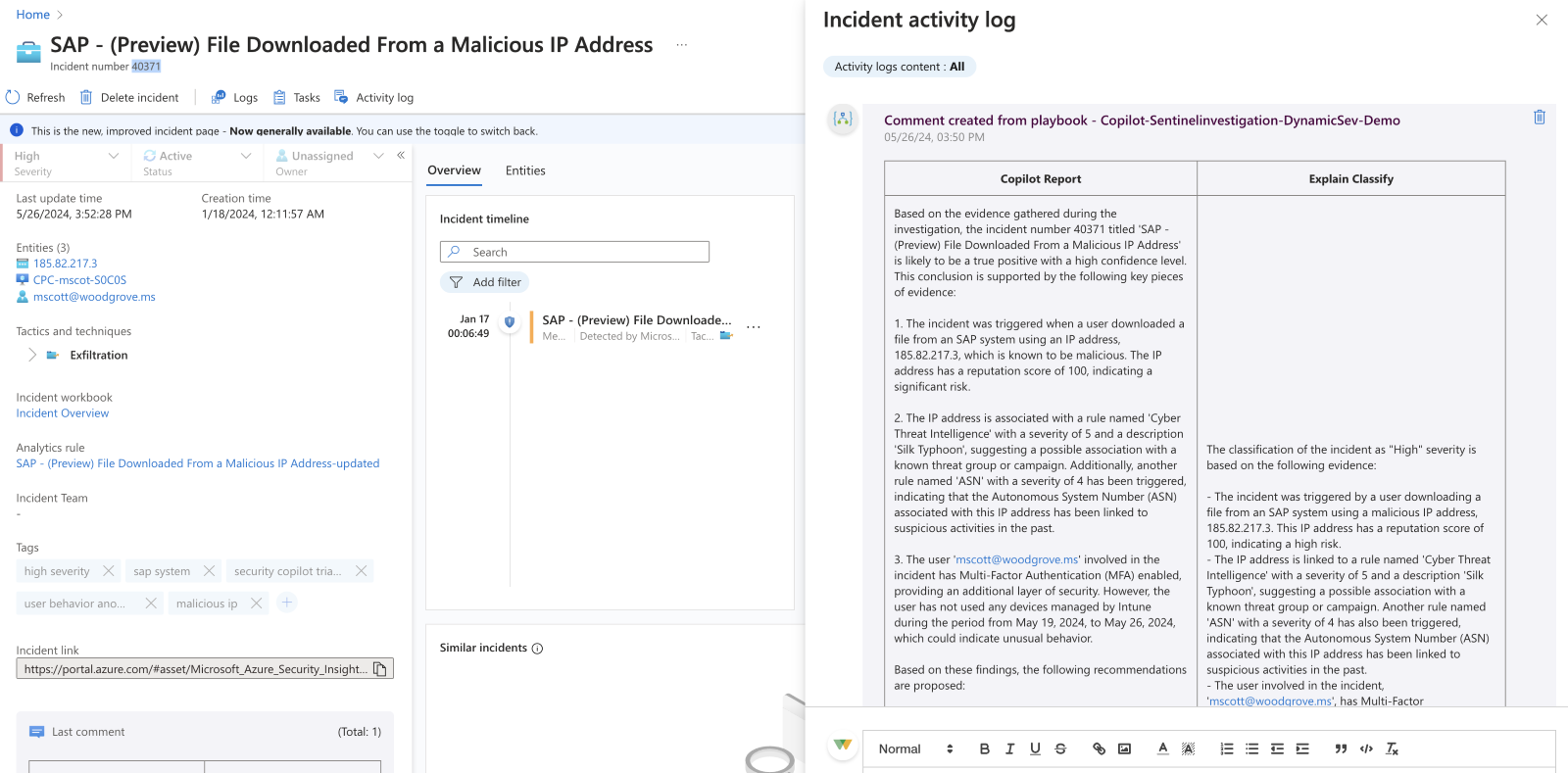
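The label-then-switch pattern can be sketched in a few lines of Python. This is an illustration under assumptions rather than the playbook's actual logic: `parse_label`, `route` and the `SWITCH` table are hypothetical names, and the status chosen for each label is a guess (the example later in this post only shows a "high" incident marked active). Because a model may wrap the label in extra words or punctuation, the sketch accepts a response only when it contains exactly one of the three labels and otherwise falls through to a default case.

```python
import re
from typing import NamedTuple, Optional


class IncidentUpdate(NamedTuple):
    severity: str
    status: str


# Assumed mapping; the real playbook's switch cases may differ.
SWITCH = {
    "high": IncidentUpdate(severity="High", status="Active"),
    "medium": IncidentUpdate(severity="Medium", status="Active"),
    "low": IncidentUpdate(severity="Low", status="New"),
}


def parse_label(response: str) -> Optional[str]:
    """Return the single label found in the response, or None if ambiguous or absent."""
    found = {
        match.lower()
        for match in re.findall(r"\b(high|medium|low)\b", response, flags=re.IGNORECASE)
    }
    return found.pop() if len(found) == 1 else None


def route(response: str) -> Optional[IncidentUpdate]:
    """Emulate the switch statement: map a label to an update, or None for the default case."""
    label = parse_label(response)
    return SWITCH[label] if label is not None else None
```

Returning `None` for the default case leaves the incident untouched, which seems like the safer choice when the model's answer cannot be read as a single label.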

Next, I have Copilot for Security explain the reasoning behind the classification and output the data as a bullet point list. This output, paired with the session summary, is used to create an HTML comment on the incident, giving an analyst a clear explanation of the steps that Copilot performed when triaging the incident and the justification for the label.

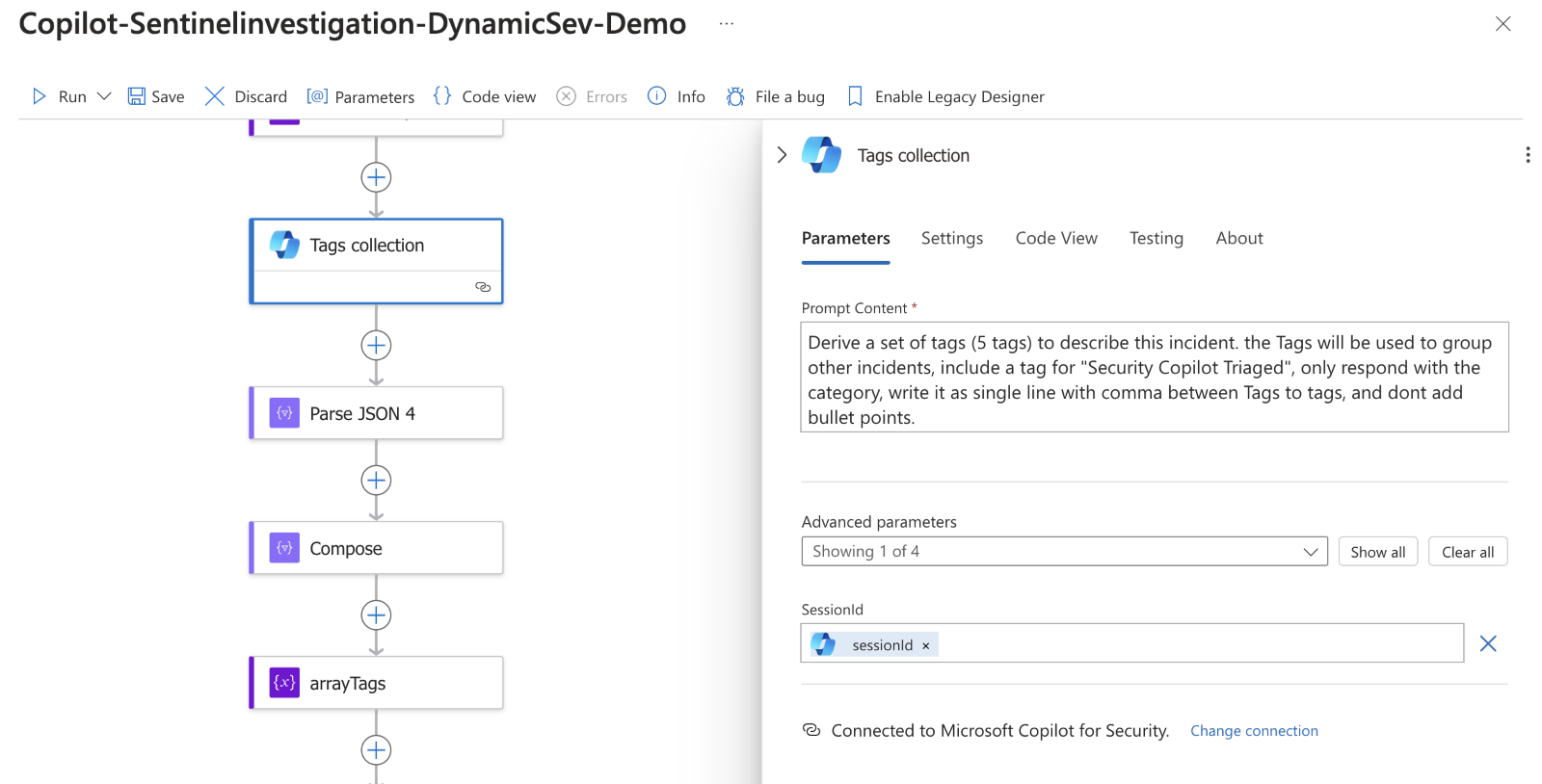
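As a rough sketch of how the bullet list and summary could be combined into an HTML comment, consider the Python below. The function names and the exact markup are assumptions made for illustration; the playbook builds its comment inside the LogicApp designer. Model output is escaped before being embedded so that stray angle brackets or ampersands in a response cannot break the comment.

```python
from html import escape
from typing import List


def bullets_to_items(text: str) -> List[str]:
    """Split a plain-text bullet list into items, dropping bullet markers and blank lines."""
    items = []
    for line in text.splitlines():
        item = line.strip().lstrip("-*•").strip()
        if item:
            items.append(item)
    return items


def build_comment(summary: str, reasoning: str, label: str) -> str:
    """Assemble an HTML comment from the session summary, reasoning bullets and label."""
    list_items = "".join(f"<li>{escape(item)}</li>" for item in bullets_to_items(reasoning))
    return (
        f"<h3>Copilot triage: {escape(label)}</h3>"
        f"<p>{escape(summary)}</p>"
        f"<ul>{list_items}</ul>"
    )
```

For example, `build_comment("A & B", "- one\n* two", "high")` yields a heading, a paragraph containing `A &amp; B`, and a two-item list.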

Finally, I have Copilot suggest tags for the incident based again on the session information. These are used to tag the incident, adding a dynamic categorization element.
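Free-form tag suggestions usually need some cleanup before they are applied to an incident. The Python sketch below shows one possible way to do that; `parse_tags`, the separator set and the cap of five tags are all hypothetical choices, not something the playbook prescribes. It splits the response on commas, semicolons and newlines, normalizes each tag to lowercase with hyphens, and removes duplicates while preserving order.

```python
import re
from typing import List


def parse_tags(response: str, max_tags: int = 5) -> List[str]:
    """Turn a free-form list of suggested tags into clean, de-duplicated labels."""
    tags: List[str] = []
    for raw in re.split(r"[,;\n]+", response):
        # Drop bullet markers, hashes, quotes and surrounding whitespace.
        tag = raw.strip().strip("-*•#\"' ").lower()
        # Collapse internal whitespace into single hyphens.
        tag = re.sub(r"\s+", "-", tag)
        if tag and tag not in tags:
            tags.append(tag)
    return tags[:max_tags]
```

Capping the number of tags keeps a verbose response from flooding the incident with labels.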

This playbook is configured to run automatically on every incident generated in my workspace. Here's an example set of outputs where we can see the incident has been automatically classified as "high" severity, marked active, shows signs of a malicious IP and file download, and includes the Copilot report as a comment. Naturally, there's room for improvement in some of the outputs, but those improvements can be made through basic prompt tuning.

Augmenting the Security Organization

At the end of last year, I briefly explored how SOAR could benefit from GAI. Notably, I called out natural language as processing instructions, influenced decision making, dynamic content and better human-in-the-loop features. This demonstration of triaging an incident hit on a lot of these categories:

- Natural language questions to be answered about the incident, bridging multiple products and data sources.

- Natural language responses summarized and "reasoned" over.

- Dynamic content created in the form of a classification, tags and summary of the investigation performed.

- Influenced decision making by using the model to suggest the severity based on the session content.

- Better human-in-the-loop, since this runs on every incident before an analyst needs to be involved.

Functionality like this will augment how security teams run their SOCs, especially as foundation models increase in accuracy and capability. Imagine a world where Copilots triage every incident in full, then use that information to inform a dynamic prioritization process in real time. Incidents with clear evidence and decision-making data are automatically actioned and closed, whereas ones requiring expert consultation are put into a Teams channel via a series of natural language questions posed by the model and answered by the analyst. In this new SOC, defenders are afforded more time to do more engaging and complex work to protect the organization.

Parting Thoughts

We are living in exciting times in security and IT operations. Generative AI is still evolving rapidly and new discoveries are constantly being shared. I strongly encourage every professional and customer I speak with to explore this space, perform experiments and try out new ideas. The Copilot for Security team is constantly looking for new use cases and user feedback. This demonstration of triaging an incident is just one of many workflows we are working on, and you should expect a whole lot more!