Agents, clarified.

In recent months, I've observed "agents" and "agentic" terms supplanting generic concepts like "language models" and "generative AI". Overall, this is a net positive for the industry as it acknowledges that single-turn, stateless language models alone are not enough to solve workflows and that we need components wrapped around these models to make them truly useful. Unfortunately, trading one monolithic term for another doesn't provide the clarity needed to make informed decisions about these new forms of applications. In this post, I am going to share what I think makes up an agent and how I evaluate implementations I see in Microsoft experiments and external startups.

Grounding and Alignment

Before diving into agent components and dimensions, it's important to have the basic definitions covered. I have yet to see consensus form around these terms, but this is how I like to refer to them when speaking about agents.

- Language models are AI systems trained on extensive text datasets to understand, generate, and manipulate human language, but their interactions are not stateful, losing context once an answer is generated.

- Single-agent architectures are language model-powered systems that use code, data, and user interfaces to plan and achieve goals. They vary in autonomy from assistive to fully autonomous and can take the form of chatbots, recommendation systems, or personal assistants built for tasks, jobs, or general purposes.

- Multi-agent architectures integrate multiple agents to achieve complex goals in dynamic environments, coordinating behavior, managing interactions, and capturing emergent learnings.

- Agentic Workflow/Patterns are systems that use single or multi-agent components to achieve an outcome.
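To make the stateless-versus-stateful distinction concrete, here is a minimal sketch. `toy_model` and `StatefulSession` are hypothetical names I made up for illustration; the "model" is a stand-in function, not a real language model API. It only shows that the context lives in the wrapper, not in the model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model call.

    It sees only the prompt it is handed and keeps nothing between calls.
    """
    return f"[reply based on {len(prompt.splitlines())} line(s) of context]"


@dataclass
class StatefulSession:
    """Wraps a stateless model and replays prior turns on every call."""

    model: Callable[[str], str]
    history: List[str] = field(default_factory=list)

    def ask(self, message: str) -> str:
        self.history.append(f"user: {message}")
        # The model stays stateless; the wrapper supplies the context.
        reply = self.model("\n".join(self.history))
        self.history.append(f"agent: {reply}")
        return reply


session = StatefulSession(toy_model)
first = session.ask("Summarize yesterday's alerts.")
second = session.ask("Now group them by severity.")
```

Calling `toy_model` directly twice gives it no knowledge of the earlier call, while the second `session.ask` hands the model all prior turns. That wrapper is the simplest version of the "components wrapped around these models" idea.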

Agent Components

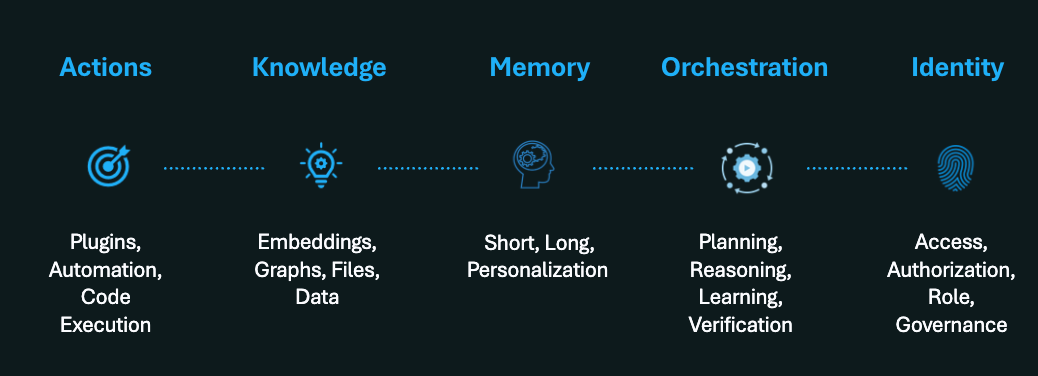

From my perspective, agents are made up of five components: actions, knowledge, memory, orchestration and identity. These build on and refine the original work of Andrew Ng.

- Actions. Agents can perform actions on behalf of a human. To do this, they require tools in the form of plugins, workflows, code execution and ways to connect to existing systems.

- Knowledge. Agents understand the world through embeddings, data structures, files, and their pre-training. Knowledge is leveraged to inform plan development and to complete specific tasks.

- Memory. Agents require memory to assist in completing short- and long-form tasks. Memory also assists in the formation of knowledge and learnings an agent can apply in the future.

- Orchestration. Agents plan and reason to accomplish the goals they are given. Orchestration aids in bringing together all the components of an agent to perform a given task.

- Identity. Agents operate with their own identities as they are not quite services nor are they human employees of an organization.

By having these components outlined, I can shift away from the word "agent" and focus on a given area that makes up the agent. For example, when considering how agentic systems could be attacked, I can leverage the components as a high-level structure to organize all the risks and proposed solutions. These components also allow me to design experiments around a specific component. For example, knowledge can be stored in all sorts of ways, from embeddings to text indexes to graph structures and more. By isolating just that component, I can begin forming experiments across the different configuration patterns to identify which method works best for a specific workflow.
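As a rough sketch of using the components as an organizing structure, the snippet below groups risk entries under the five components and reports which components have no entries yet. The `Component` enum mirrors the list above; `group_by_component` and every risk string are hypothetical placeholders I wrote for illustration, not a vetted threat model.

```python
from enum import Enum
from typing import Dict, List, Tuple


class Component(Enum):
    ACTIONS = "actions"
    KNOWLEDGE = "knowledge"
    MEMORY = "memory"
    ORCHESTRATION = "orchestration"
    IDENTITY = "identity"


def group_by_component(
    entries: List[Tuple[Component, str]],
) -> Dict[Component, List[str]]:
    """Bucket (component, risk) pairs so every component has a slot."""
    grouped: Dict[Component, List[str]] = {c: [] for c in Component}
    for component, risk in entries:
        grouped[component].append(risk)
    return grouped


# Placeholder entries for illustration only.
entries = [
    (Component.ACTIONS, "tool invoked with attacker-supplied arguments"),
    (Component.KNOWLEDGE, "poisoned document in the retrieval index"),
    (Component.MEMORY, "malicious instruction persisted across sessions"),
    (Component.KNOWLEDGE, "sensitive file exposed through embeddings"),
]

register = group_by_component(entries)

# Components with no recorded risks show where the analysis has gaps.
uncovered = [c.value for c in Component if not register[c]]
```

With these placeholder entries, `uncovered` lists orchestration and identity, which is the kind of gap the component structure is meant to surface.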

While these components offer structure, more work still needs to be done to further enumerate the different patterns, implementations and sub-components. The more detailed we can get, the better we can model risk and measure the success of the experiments we run.

Agent Dimensions

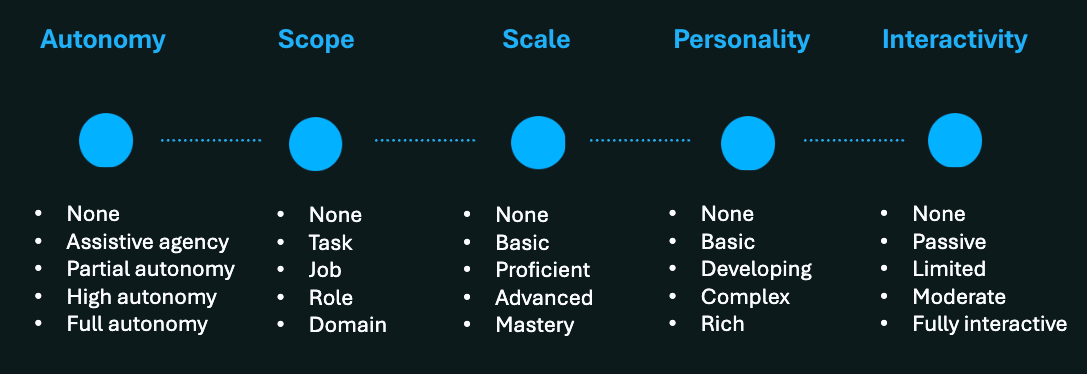

Agent components give us more nuance in how we talk about agents, but alone, they aren't enough to measure the sophistication of a given implementation. For that, I use the dimensions of autonomy, scope, scale, personality and interactivity.

- Autonomy. Defines how much a human needs to be involved versus a machine. The higher the level, the more sophisticated the agent and greater freedom it has to operate without humans in the loop.

- Scope. Defines the breadth of work the agent is designed to complete. As agents become more capable, they get closer to generally completing any work within a given domain.

- Scale. Defines how much experience the agent has relative to the scope it is executing within. This is influenced by technology and pricing/packaging. For example, basic might map to fast responses from GPT-4o whereas advanced may take longer to process and use OpenAI's o1.

- Personality. Defines whether the agent takes on a personality and how that manifests. For personal assistants, you will likely want a rich personality, but for agents performing operational work, you may want no personality at all.

- Interactivity. Defines how much the agent may be part of a user's experience. Agents with no interaction will operate without ever being seen, though their actions may be directly displayed to the user. Agents with full interactivity could take on human-like forms.

To illustrate the utility of these dimensions, I have two agent descriptions below.

- Background Data Processor. The Background Data Processor functions with Assistive Agency, assisting with data processing tasks but requiring human oversight for final validation. It is scoped to handle individual Tasks that contribute to larger projects. With Basic experience, it has essential knowledge of data processing and can handle straightforward data tasks. This agent has No personality, being neutral and purely functional, focusing solely on data processing without personal traits. It operates with No interaction (background), processing data entirely in the background without direct user interaction.

- Financial Analysis Assistant. The Financial Analysis Assistant has High Autonomy, capable of performing comprehensive financial analyses and generating reports with minimal human intervention. It operates within the Domain of the financial industry, handling various financial analysis tasks across different jobs. With Advanced experience, it possesses extensive and in-depth knowledge of financial analysis, able to tackle complex financial challenges. The assistant has a Developing personality, exhibiting more defined traits and moderate expression of preferences, making the interaction more engaging. It is Fully interactive, interacting directly with users to provide insights, answer questions, and offer detailed financial reports.

It should be clear that the background data processor is far less complex than the financial analysis assistant. What I like about these dimensions, especially when proposing and building agents in security, is that they offer a means to describe an implementation. Without this nuance, I find the quest is always to build the most capable, most advanced, most sophisticated solution to every problem. The dimensions give me and others the freedom to focus and expand as we see success.
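The two descriptions above can also be written down as data, which makes the comparison explicit. This is a minimal sketch under my own assumptions: the level names come from this post, but their ordering and numeric values are guesses for illustration, and `AgentProfile` and `higher_dimensions` are hypothetical helpers rather than part of any framework.

```python
from dataclasses import dataclass, fields
from enum import IntEnum
from typing import List

# Level names are taken from the post; the ordering is an assumption.
class Autonomy(IntEnum):
    ASSISTIVE = 1
    PARTIAL = 2
    HIGH = 3
    FULL = 4

class Scope(IntEnum):
    TASK = 1
    JOB = 2
    DOMAIN = 3
    GENERAL = 4

class Scale(IntEnum):
    BASIC = 1
    PROFICIENT = 2
    ADVANCED = 3

class Personality(IntEnum):
    NONE = 0
    BASIC = 1
    DEVELOPING = 2
    RICH = 3

class Interactivity(IntEnum):
    NONE = 0
    LIMITED = 1
    FULL = 2

@dataclass(frozen=True)
class AgentProfile:
    name: str
    autonomy: Autonomy
    scope: Scope
    scale: Scale
    personality: Personality
    interactivity: Interactivity

def higher_dimensions(a: AgentProfile, b: AgentProfile) -> List[str]:
    """Names of the dimensions on which profile a sits above profile b."""
    return [
        f.name
        for f in fields(a)
        if f.name != "name" and getattr(a, f.name) > getattr(b, f.name)
    ]

processor = AgentProfile(
    "Background Data Processor",
    Autonomy.ASSISTIVE, Scope.TASK, Scale.BASIC,
    Personality.NONE, Interactivity.NONE,
)
analyst = AgentProfile(
    "Financial Analysis Assistant",
    Autonomy.HIGH, Scope.DOMAIN, Scale.ADVANCED,
    Personality.DEVELOPING, Interactivity.FULL,
)

analyst_leads = higher_dimensions(analyst, processor)
processor_leads = higher_dimensions(processor, analyst)
```

I deliberately compare dimension by dimension instead of collapsing everything into a single score, since the point of the dimensions is to describe an implementation rather than rank it. Here the analyst sits higher on all five dimensions and the processor on none.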

Parting Thoughts

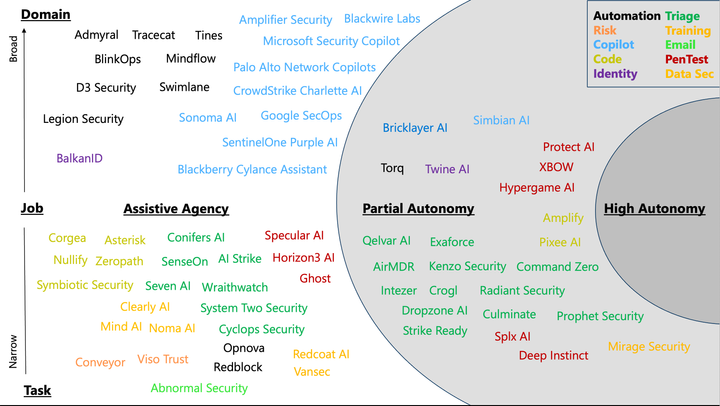

Agents are ushering in the next stage of AI-based solutions, but we should not think of them as monolithic implementations. The more detailed we can be in our discussions, the more learnings we can share and apply. When I look at the security industry, much of what I see are assistive agency solutions, scoped to tasks with basic scale, basic personality and limited interaction. Put more directly, a lot of security solutions are incorporating some form of agentic components in their products today. This is good, but it's not going to get us to a transformed state of security.

What I find more exciting are the AI-first startups that are pushing toward partial autonomy scoped to jobs with more proficient scale. Companies that come to mind in this area include Dropzone, AirMDR, XBOW, Pixee, Mirage Security and Bricklayer. These implementations are still early, but they are stretching the limits of the technology today and leaning into the notion that costs will come down, speeds will improve and the technology will become more capable. This is the sort of thinking we need as an industry because what we've been doing struggles to scale and feels limited.

Special thanks to Sunder Srinivasan, Alexander Stojanovic and others in Microsoft Security Research for offering feedback and refinement.