Lower Barriers and Increase AI Project Success

Explore the evolution of AI interactions, from seamless 'No Prompt' to limitless 'Open Prompt' methods, enhancing security and IT workflows.

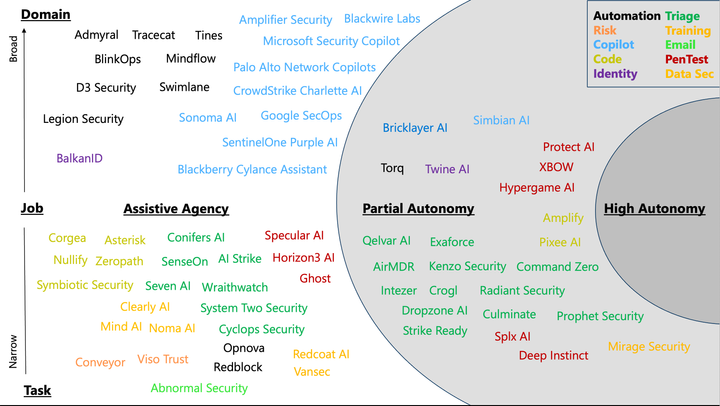

Generative AI is demonstrating tremendous promise to speed up workflows and upskill practitioners across various aspects of security and IT management. Consumers can choose from a variety of foundation models, small and open models, and product experiences to begin reaping the benefits of this technology. Reporting on productivity gains is still early, but last week, Microsoft released results from a study that compared practitioners of different experience levels performing tasks with and without Copilot for Security. Experienced analysts were 22% faster and 7% more accurate when using Copilot. While this is product-focused, the results indicate there's real opportunity in the technology.

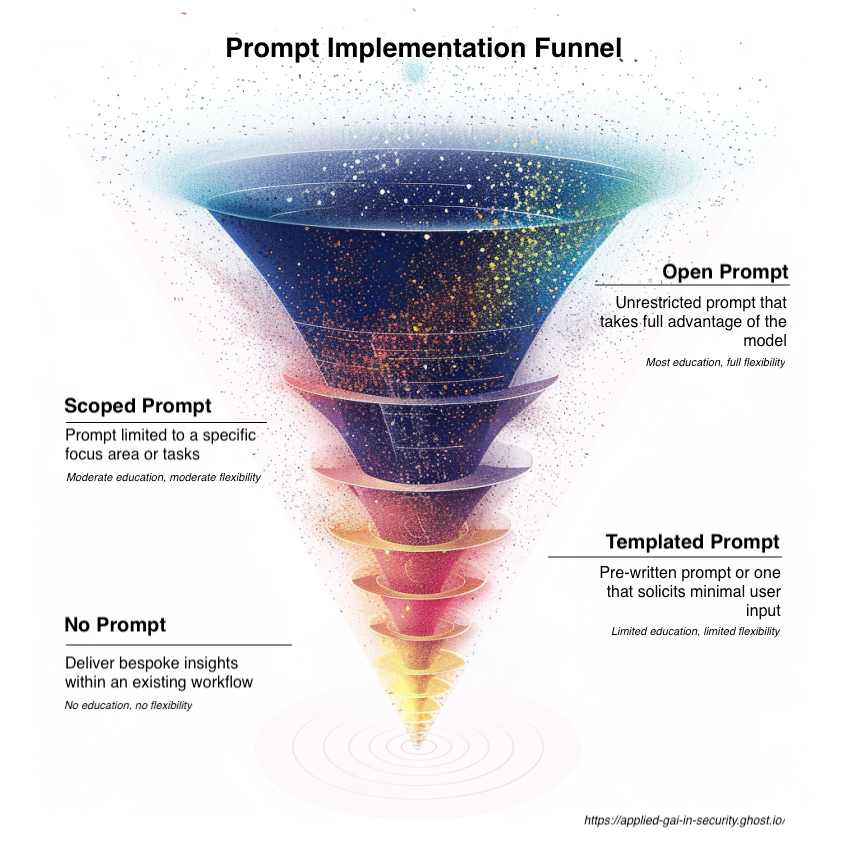

Prompt Implementation Funnel

I've spent a considerable amount of time meeting with organizations and people in security roles who are curious to apply generative AI. A recurring trend I've observed is that many organizations are exploring the space by trying out different products. Additionally, they are creating their own versions of tools similar to ChatGPT or Copilot, tailored to help with particular tasks. Instinctively, many builders emulate the conversational interface that has become synonymous with the technology, but this isn't always the best option. In this post, I want to enumerate the different prompt implementations I've seen, starting with the most flexible yet most difficult to learn and ending with the least flexible but easiest to learn.

Open Prompts

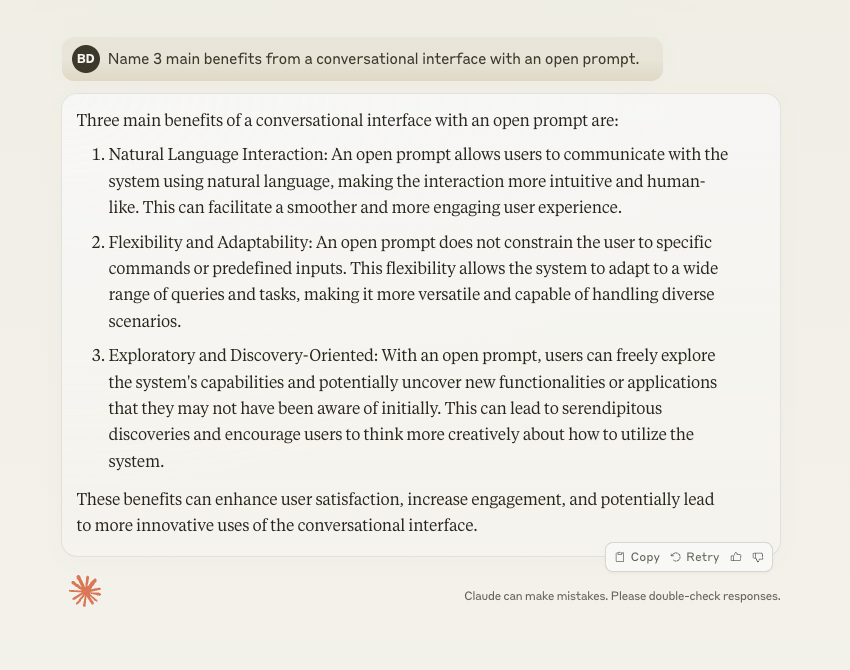

When interacting with Claude, Gemini, or ChatGPT, you are using an open prompt. What makes this interface great is the simplicity and flexibility it offers. When connected to a foundation model, you can literally ask anything you want and you are bound to get an answer back. In practice, implementing such a prompt is easy, and as long as it remains a passthrough to a foundation model, it can work great.
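To make the "passthrough" idea concrete, here is a minimal sketch of how thin an open prompt can be. The `ModelFn` type, `open_prompt`, and `echo_model` are hypothetical names used only for illustration; `echo_model` is a fake stand-in, not a real provider client.

```python
from typing import Callable

# Hypothetical stand-in for a foundation model client: text in, text out.
ModelFn = Callable[[str], str]


def open_prompt(user_text: str, model: ModelFn) -> str:
    """Pass the user's text to the model unchanged and return the reply."""
    return model(user_text)


def echo_model(prompt: str) -> str:
    # Fake model for demonstration only; a real system would call a provider here.
    return f"(model reply to: {prompt})"


reply = open_prompt("Summarize today's phishing alerts", echo_model)
```

Nothing in this sketch shapes or constrains the input, which is exactly why the burden of writing a good prompt falls on the user.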

Where I take issue with conversational interfaces and open prompts is that they place a higher barrier to entry on the user of the system, especially if the open prompt is attached to a fine-tuned model or a system focused on a specific subject matter or task. These interfaces place unnecessary cognitive load on users, who need to stop their task to consider what prompt to ask and how best to format it. In security, that can function as a distraction or take away from important work to be done. Affordances such as suggested prompts, prompt templates, and in-experience demonstrations can go a long way toward rapidly increasing user understanding, making these interfaces less of a burden.

In short, open prompts rock, especially with their natural language input, though they come at a cost to users as they must learn this new system.

Scoped Prompts

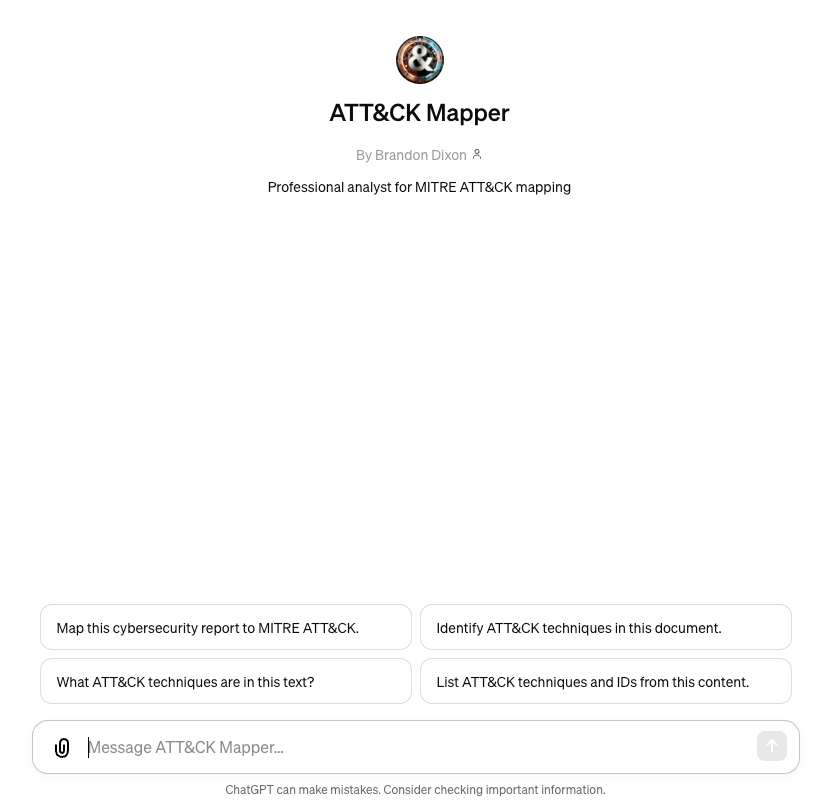

Prior to OpenAI releasing GPTs, if you wanted to accomplish a specific task in ChatGPT, you would need to guide the system using prompts, a process I dubbed scaffolding. OpenAI took notice of this user behavior and created GPTs, a way to constrain a foundation model to a specific focus. Building a GPT requires no coding experience; you only need to answer a few questions before you have a working implementation. Once created, you can deploy this GPT for anyone to use.

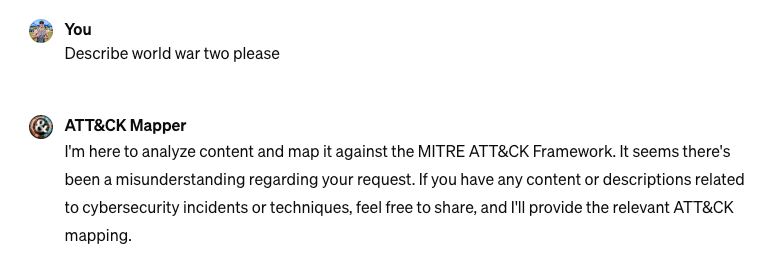

GPTs are a great example of scoped prompts as they limit the capabilities of the model to a narrow focus. In the screenshot above, we describe a security-specific GPT that maps content supplied by the user to the MITRE ATT&CK framework. Asking the GPT about history or other unrelated topics results in the GPT guiding the user back to the core tasks it's been "trained" to perform.

While scoped prompts still introduce cognitive load on users, it's less than with an open prompt, as a user will immediately understand the model has a specific task it's been optimized to perform. Additionally, their responses can be more informative and guide the user back on track. When placing a prompt within an existing security or IT tool, scoped prompts offer a nice balance between the flexibility of letting a user type what they want and keeping the model focused on a specific outcome.
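If you are building a scoped prompt into your own tool rather than using a GPT, one common way to approximate it is to place fixed instructions ahead of whatever the user types. The sketch below assumes a chat-style message list with `role` and `content` keys, a convention many chat APIs share; the instruction wording and the `build_scoped_messages` helper are illustrative assumptions, not how GPTs are implemented internally.

```python
# Fixed instructions that narrow the model to one task (wording is an assumption).
SCOPE_INSTRUCTIONS = (
    "You map user-supplied security content to MITRE ATT&CK techniques. "
    "If asked about anything else, briefly decline and steer the user "
    "back to that task."
)


def build_scoped_messages(user_text: str) -> list[dict[str, str]]:
    """Wrap free-form user input with the fixed scoping instructions."""
    return [
        {"role": "system", "content": SCOPE_INSTRUCTIONS},
        {"role": "user", "content": user_text.strip()},
    ]


messages = build_scoped_messages("  PowerShell spawned from a Word macro  ")
```

The user still types freely, but every request reaches the model with the same framing, which is what keeps the responses on task.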

Templated Prompts

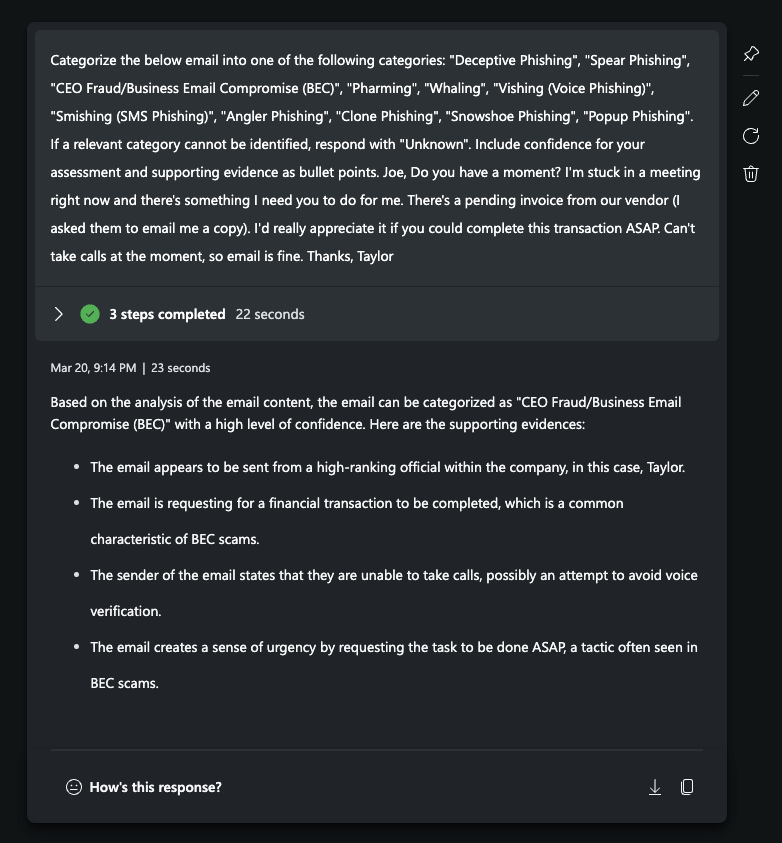

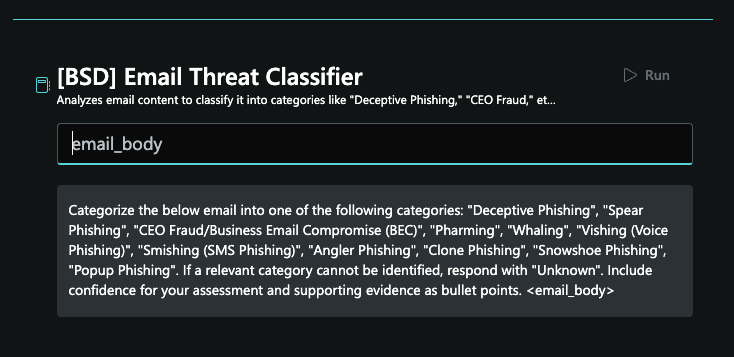

Copilot for Security with an Email Threat Classifier template prompt.

Templated prompts offer a streamlined way for users to get desired outcomes without having to craft their own prompts from scratch. Users can either use these pre-written templates as they are or add a small amount of information to tailor the prompt. This approach limits flexibility but significantly simplifies the process, eliminating the need for users to grasp the nuances of generative AI systems or the art of prompt engineering. Templates also serve as an educational tool, providing examples that help users understand how the system operates while requiring minimal learning. Additionally, templated prompts can be used alongside open or scoped prompt interfaces to enhance learning and usability, reducing friction when users later progress to these more open interfaces.
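A templated prompt can be as simple as pre-written text with a few named slots for the user to fill. Here is a rough sketch using Python's standard `string.Template`; the template wording, the field names, and the `fill_email_template` helper are made up for illustration and are not taken from Copilot for Security.

```python
from string import Template

# Pre-written prompt; the user only supplies the three named fields.
EMAIL_THREAT_TEMPLATE = Template(
    "Classify the following email as phishing, spam, or benign and explain why.\n"
    "Sender: $sender\n"
    "Subject: $subject\n"
    "Body:\n$body"
)


def fill_email_template(sender: str, subject: str, body: str) -> str:
    """Fill the fixed template; substitute() raises KeyError if a field is missing."""
    return EMAIL_THREAT_TEMPLATE.substitute(sender=sender, subject=subject, body=body)


prompt = fill_email_template(
    sender="billing@example.com",
    subject="Invoice overdue",
    body="Click here to pay now.",
)
```

Showing the filled-in prompt to the user before it runs is one way to get the educational benefit described above, since they see what a well-formed prompt looks like.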

No Prompts

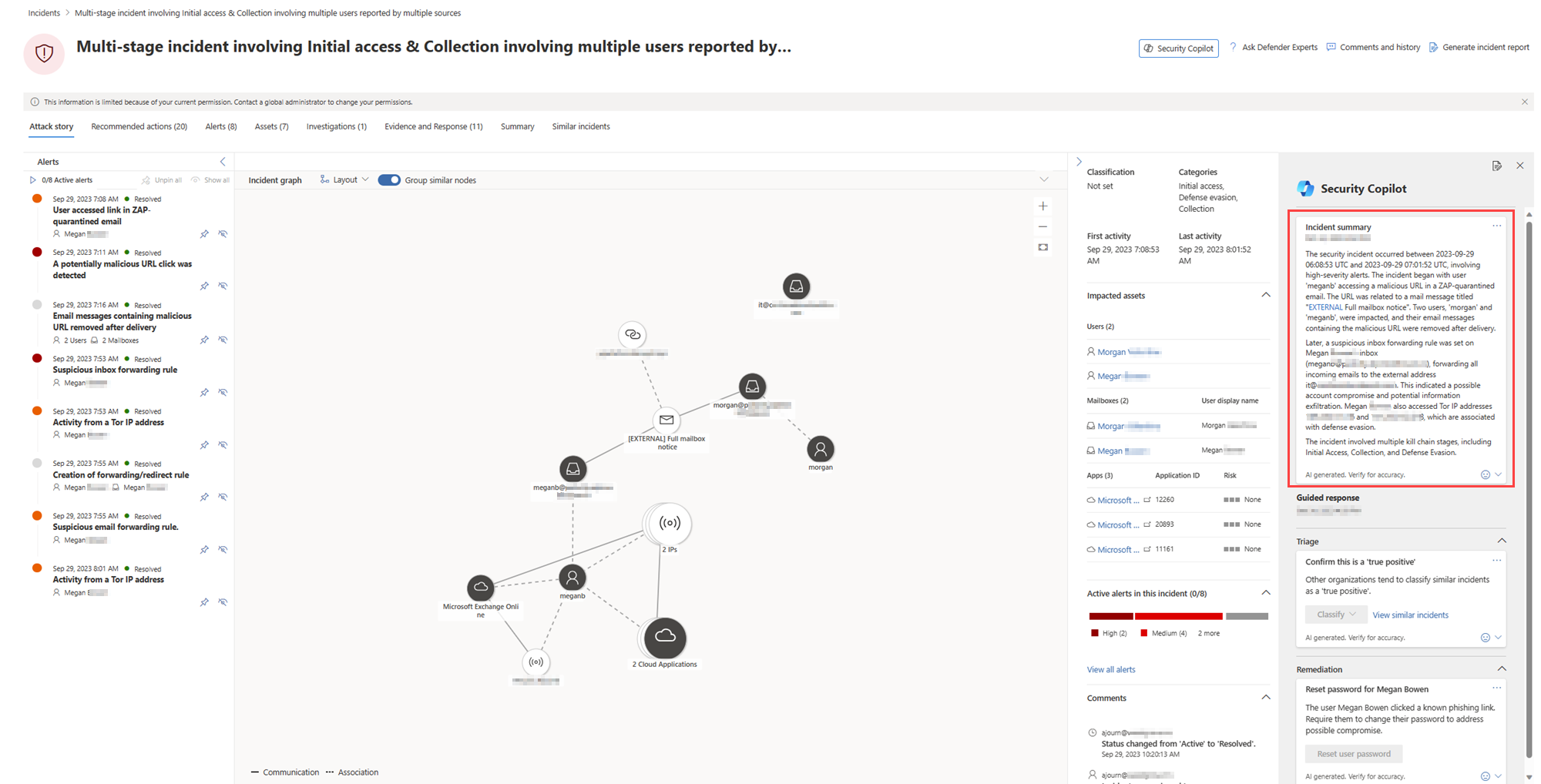

The final method of interacting with generative AI is no prompt at all. In this implementation, a prompt is executed behind the scenes within the product, often in the context of an existing workflow or user action. In the above screenshot, a user opens an incident to investigate further, and generative AI is used to create a summary of the incident and its corresponding alerts.

No prompt implementations remove any options for a user to engage, but also come with the least cognitive load as a user never needs to be aware of generative AI at all. They reap the benefits of the solution without ever having to understand how it works or what prompt was used to form the input. Being deployed in context means the existing workflow is simply enhanced.
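As a sketch of what "behind the scenes" might look like, the product can build the prompt from data it already has and trigger it from an existing user action. Everything here is hypothetical: the incident fields, the `on_incident_opened` hook, and the fake model are assumptions for illustration, not the product's actual implementation.

```python
from typing import Callable


def build_incident_prompt(incident: dict) -> str:
    """Assemble the hidden prompt from incident data the product already holds."""
    alert_lines = "\n".join(f"- {alert}" for alert in incident["alerts"])
    return (
        f"Summarize incident {incident['id']} titled '{incident['title']}' "
        "for a security analyst.\n"
        f"Related alerts:\n{alert_lines}"
    )


def on_incident_opened(incident: dict, model: Callable[[str], str]) -> str:
    """Hypothetical UI hook: runs when a user opens an incident; no user input needed."""
    return model(build_incident_prompt(incident))


# Fake model that records the prompt it receives, for demonstration only.
seen_prompts: list[str] = []


def fake_model(prompt: str) -> str:
    seen_prompts.append(prompt)
    return "Summary: suspicious sign-in followed by mailbox rule creation."


summary = on_incident_opened(
    {"id": 42, "title": "Suspicious sign-in", "alerts": ["Impossible travel", "New inbox rule"]},
    fake_model,
)
```

The user only ever sees `summary`; the prompt text stays an implementation detail the product team can refine without retraining anyone.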

Wrapping up

| Prompt Type | Complexity/Education Needed | Flexibility of Use |
|---|---|---|
| No Prompt | Lowest | Lowest |
| Templated Prompt | Low | Moderate |
| Scoped Prompt | Moderate | High |
| Open Prompt | High | Highest |

Organizations eager to build new tools or update existing ones with generative AI have a ton of choice from models to providers to platforms and infrastructure. The technology can bring real gains to those in security and IT, though the implementation matters.

Open and scoped prompts offer maximum flexibility in how a model can be used, though they place cognitive load on users and force them to understand this new technology to get the most out of it. Templated prompts or no prompt at all significantly limit the potential of the model, though they allow a user to immediately take advantage of this new technology in their existing processes.

When building solutions using generative AI, it's best to consider the audience and where they are in their journey with the technology. I find that starting with no prompts and working my way up through the implementations offers the greatest chance for success. This approach lets you land the benefits of generative AI while slowly educating users on a new way of working.